What Is SDLC Security?

SDLC security embeds threat modeling, policy-as-code gates, and continuous telemetry into every phase of development, from requirements through maintenance, to detect and prevent vulnerabilities before production. The practice aligns engineering speed with regulatory assurance, giving executives real-time evidence that each release stays within the organization’s risk budget.

SDLC Security Overview

Software development lifecycle (SDLC) security refers to the structured application of security principles, tools, and safeguards across every phase of the development process. Rather than treating security as a final step or postdeployment concern, modern SDLC security strategies distribute controls from requirements gathering through maintenance. The goal is to prevent vulnerabilities from forming, not just detect them after the fact.

Traditional models separated engineering velocity from risk management. The division, however, creates systemic exposure. Attackers don’t wait for release milestones. They exploit exposed APIs, misconfigured infrastructure, and third-party libraries as soon as code becomes reachable. Effective SDLC security integrates threat modeling, static and dynamic analysis, secure design principles, and runtime enforcement into the flow of development.

A shift-left approach moves prevention closer to the source of change. It turns feedback loops inward, empowering development teams to identify and resolve issues before they propagate downstream. But coverage isn’t enough. Business mandates and regulatory frameworks expect evidence of security controls tied to engineering output. Frameworks like NIST SSDF and ISO 27034 align development security with traceable, auditable practices.

Security within the SDLC mustn’t operate in isolation. Controls at each stage need to inform, constrain, and accelerate the next. Failure to align threat awareness and risk posture across the lifecycle weakens trust, delays delivery, and increases remediation costs. The security architecture must mature with the software architecture.

Security Across the Classic SDLC Phases

Security functions best when it becomes a property of the system. The classic SDLC model provides the scaffolding. Each phase builds on the previous one, and each missed control early on compounds risk downstream. Security must travel with the workflow, rather than arriving after it.

Requirements

Security requirements should be expressed with the same rigor as functional stories. Instead of tacking them on later, teams should codify expectations for threat resilience, data confidentiality, and compliance alignment during feature definition.

Use threat intelligence and regulatory context to shape security user stories. Stories should carry specific acceptance criteria tied to verifiable behaviors. Abuse cases, or hypothetical misuse scenarios, force early visibility into how features could be subverted. Rather than treat them as worst-case distractions, use them to define mitigation pathways. Limiting input scope and enforcing identity constraints are two examples. Such criteria then become inputs to the test phase, creating continuity between intent and enforcement.

Planning

Planning aligns risk appetite with delivery strategy, which includes defining the scope of security coverage and establishing a baseline for acceptable exposure across environments.

Security budgeting must include both fixed and variable dimensions. Fixed costs include tools for code scanning, secrets detection, and dependency management. Variable costs scale with team size, system complexity, and integration needs. Resource allocation must also consider ownership across silos. If infrastructure as code governs environment creation, then risk prioritization must account for misconfiguration exposure long before a workload runs.

Security test planning begins here. Coverage plans for static, dynamic, and composition analysis belong in the delivery forecast, not as last-minute tasks.

Design

Design is the control point where architecture either enables secure behavior or cements systemic weaknesses. Threat modeling, properly practiced, should shape key decisions. Skip it, and teams risk hardwiring attack paths into the blueprint.

Begin with data flow diagrams. Map trust boundaries, privilege levels, and input sources. Highlight areas with dynamic interpretation, external input, or lateral trust dependencies. Tie each risk to known controls and define verification paths. Choose protocols and cipher suites explicitly. Never assume downstream enforcement. Default to secure configurations even when internal.

Security architecture should align with the threat model, not just the deployment diagram. A zero-trust assumption at this stage allows designs to avoid overreliance on implicit boundaries, network trust, or centralized brokers.

Implementation

Coding is where policy must become behavior. Without enforced guardrails, developer intent alone can’t sustain security posture.

Secure coding standards should exist at the language and framework level. Static code analyzers should flag violations early, but those tools work best when tied to team-enforced policies that cover explicit input validation, type safety, memory management, and proper API use. Treat dangerous functions and known anti-patterns as break conditions, not advisory warnings.

Secrets must never reside in source code. Implement pre-commit checks, repository scanning, and build-time enforcement. Rotate credentials based on role, scope, and lifecycle. Validate that code is checked in as signed commits with auditable provenance.
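
A pre-commit secrets check can be sketched as a small pattern scanner. The example below is a minimal illustration rather than a replacement for a dedicated scanner. The `find_secrets` helper and the rule names are hypothetical, and the two regular expressions are simplified assumptions about what credentials look like.

```python
import re

# Simplified, illustrative rules (assumptions). Real scanners ship many more
# patterns and usually add entropy checks.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded_password": re.compile(
        r"(?i)\b(password|passwd|secret)\s*=\s*['\"][^'\"]{8,}['\"]"
    ),
}


def find_secrets(text):
    """Return (line_number, rule_name) pairs for lines that match a rule."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, rule))
    return findings


if __name__ == "__main__":
    staged = 'db_host = "localhost"\npassword = "correct-horse-battery"\n'
    for lineno, rule in find_secrets(staged):
        print(f"line {lineno}: possible {rule}")
```

A hook built on a check like this would exit nonzero when `find_secrets` returns any findings, which blocks the commit until the value moves to a secrets manager.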

Security reviews should focus less on catching bugs and more on identifying design mismatches and systemic risk debt.

Testing

Security testing validates not only code correctness but exposure containment and policy effectiveness.

Use static analysis (SAST) to detect unsafe code paths, tainted input flow, and insecure function use. Combine with dynamic analysis (DAST) to simulate adversarial behavior across APIs and web frontends. Software composition analysis (SCA) must run in parallel, resolving all dependencies to detect vulnerable libraries and license violations.

Go beyond pass/fail scans. Introduce mutation testing to inject logic perturbations and confirm the presence of defensive behaviors. Use fuzzing to uncover parsing weaknesses. Instrument tests to verify logging, failure modes, and runtime enforcement.

Security tests must map directly back to requirements and design assumptions. Gaps should trigger remediation cycles or architecture updates, not manual exception handling.

Deployment

Security loses context when deployment becomes a separate domain. To maintain traceability and control, security must ride with the artifact.

Sign every build artifact. Record its hash, origin, and build context. Use attestations to prove toolchain integrity. Enforce policy checks on infrastructure as code before provisioning. Define allowlists for cloud resources and enforce constraints using OPA or native cloud policy engines.
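
Recording an artifact's hash, origin, and build context can be sketched in a few lines. The example below is a simplified assumption of what such a record might hold. The field names and both helpers are hypothetical, and the record is not a signature: a real pipeline would sign it with a tool such as Cosign.

```python
import hashlib


def build_record(artifact: bytes, source_repo: str, commit: str, builder: str) -> dict:
    """Describe an artifact by its digest plus where and how it was built."""
    return {
        "sha256": hashlib.sha256(artifact).hexdigest(),
        "source_repo": source_repo,  # origin
        "commit": commit,            # build context
        "builder": builder,          # build context
    }


def matches_record(artifact: bytes, record: dict) -> bool:
    """True only if the artifact's digest equals the recorded digest."""
    return hashlib.sha256(artifact).hexdigest() == record["sha256"]
```

A deployment gate could call `matches_record` on the artifact it is about to release and refuse to proceed when the digest differs from the one captured at build time.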

Treat pipelines as targets. Audit for misconfigured runners, unscoped tokens, and unsecured containers. Require ephemeral environments and immutable containers to minimize attack surfaces. Avoid postdeployment patches to secure pipelines. Harden by design.

Shift scanning and validation left, but gate deployments right. Don't confuse faster delivery with looser enforcement.

Maintenance

Operational exposure changes daily. Security’s final burden isn’t closing the loop but keeping it open.

Patch management must follow structured service-level objectives. Prioritize patches based on runtime observability, exploitability scores, and business criticality. Automate ingestion of threat feeds to trigger real-time patch triage, but avoid blind CVSS-based prioritization.
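
To illustrate why CVSS alone is a weak sort key, the sketch below ranks findings by a combined score. The `Finding` fields, the weighting formula, and the identifiers are all hypothetical assumptions chosen for illustration. A real program would calibrate the weights against its own data.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    finding_id: str
    cvss: float                 # base score, 0.0 to 10.0
    exploit_likelihood: float   # 0.0 to 1.0, e.g. from an exploit-prediction feed
    reachable_at_runtime: bool  # observed loaded or executed in production
    business_criticality: int   # 1 (low) to 3 (high)


def priority(f: Finding) -> float:
    """Illustrative score: severity scaled by likelihood, criticality, and reachability."""
    score = (f.cvss / 10.0) * f.exploit_likelihood * f.business_criticality
    return score * (2.0 if f.reachable_at_runtime else 1.0)


def triage(findings):
    """Return findings ordered from most to least urgent."""
    return sorted(findings, key=priority, reverse=True)
```

Under these assumptions, a medium-severity flaw that is reachable, likely to be exploited, and sits in a critical service outranks a high-CVSS flaw in code that never runs.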

Continuous monitoring requires security telemetry at every layer. Collect signals from identity systems, infrastructure controls, runtime agents, and API gateways. Correlate those against behavioral baselines, drift detection, and cloud audit logs. Treat security alerts as product signals, not just SOC noise.

Link findings back into planning and requirements. What you learn from production should redefine what you build next.

Common Vulnerabilities and Attack Vectors in the SDLC

Attackers no longer wait for an application to reach production. They target development systems, pipelines, and tooling with the same intent and sophistication once reserved for perimeter defenses. SDLC security must account for these upstream exposures or risk delivering compromise by design.

DevOps Toolchain Misconfigurations

DevOps pipelines are powerful, often overprivileged, and sometimes blind to their own attack surface. Misconfigurations in orchestration systems, CI/CD platforms, or infrastructure provisioning tools create reliable footholds for adversaries.

Default configurations often permit unscoped credentials, unrestricted shell access, or overly permissive IAM roles for build runners. Those flaws become entry points into the software factory. If a pipeline tool runs as a high-privilege service account across environments, a single exploited container can inherit full control of build artifacts, environment variables, and deployment targets.

To reduce exposure, enforce least privilege access on service identities, segment build agents, and require explicit approval steps for privilege escalation. Scan container base images and tool dependencies with the same rigor applied to production software. Every tool in the chain must comply with the same controls required of the code it ships.

Code Tampering and Source-Code Leaks

In addition to storing business logic, source repositories store configuration values, deployment manifests, API specifications, and authentication flows.

Attackers use token-harvesting techniques to gain access to Git platforms, either through phishing, OAuth abuse, or supply chain impersonation. Once inside, they may insert malicious code, alter build configurations, or exfiltrate sensitive logic for downstream exploitation.

Tampering becomes difficult to detect in high-velocity environments without proper commit signing, branch protection, and immutable audit logging. All contributions must be verified cryptographically. Repository access should be gated by fine-grained access policies, enforced via single sign-on, and backed by just-in-time permissions. Secrets scanning must occur precommit.

The consequences of a tampered source go beyond runtime impact. Compromised software can pass QA, enter production, and propagate into customer environments unnoticed. Once released, remediation costs multiply.

Dependency and Supply-Chain Attacks

Modern software is assembled more than written. Each service, library, or framework introduced via package managers or container registries becomes a potential threat vector.

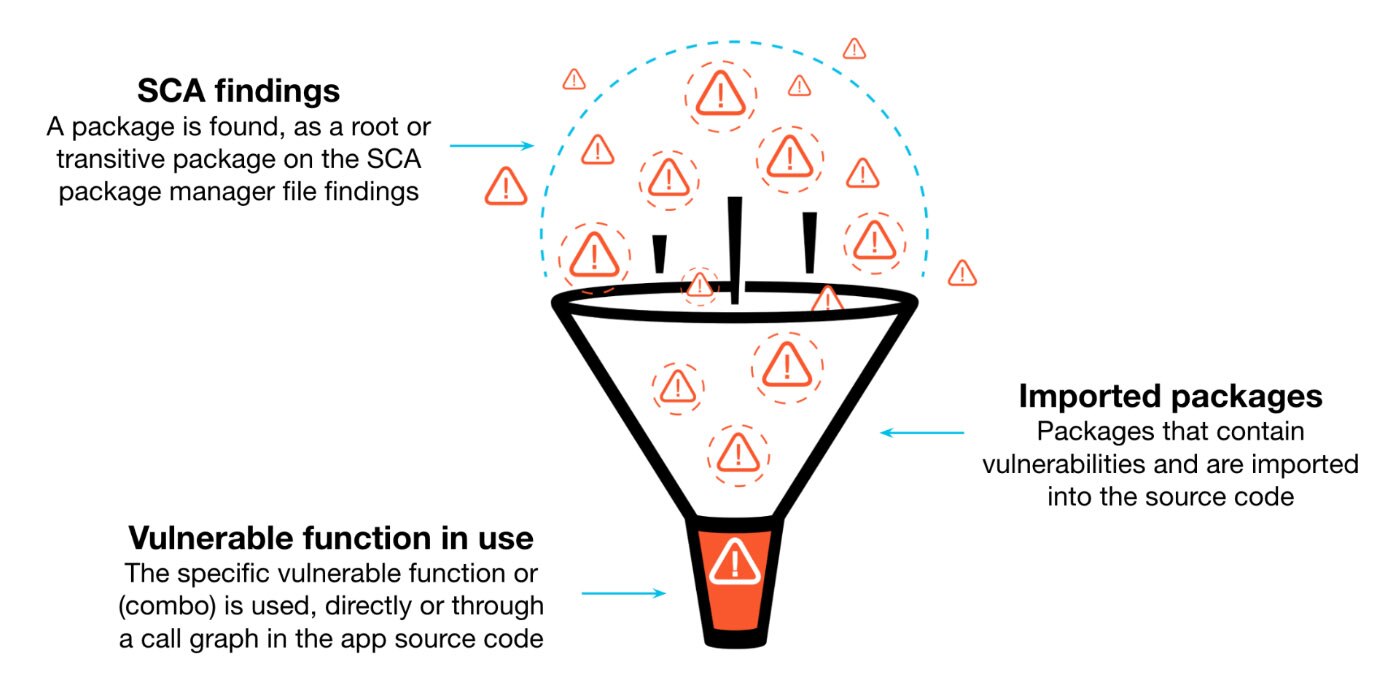

Figure 1: Open-source packages commonly introduce vulnerabilities.

Attackers inject malicious code into widely used packages, often under the guise of benign updates or typo-squatted names. In many cases, the malware activates only in specific build environments, evading casual inspection and triggering payloads during runtime or during CI workflows.

Unpinned versions, broad wildcard constraints, and implicit transitive dependencies amplify risk. Even when direct dependencies are audited, few teams monitor nested layers or verify artifact provenance.

To secure the supply chain, enforce allowlists of verified packages and registries. Use software bill of materials (SBOMs) to track dependency trees and monitor them for vulnerability disclosures. Apply reproducible builds and artifact signing to confirm that consumed code matches expected behavior.
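
The pinning and allowlist checks described above can be sketched for a `requirements.txt`-style manifest. The `check_requirements` helper and its messages are hypothetical, and the parser handles only simple `name==version` lines, which is an assumption that ignores extras, markers, and hashes.

```python
import re

# Exact pin: name==version, with no wildcard in the version part.
PINNED = re.compile(r"^([A-Za-z0-9_.-]+)==([A-Za-z0-9_.!+-]+)$")


def check_requirements(lines, allowlist):
    """Return human-readable problems for unpinned or non-allowlisted packages."""
    problems = []
    for raw in lines:
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        match = PINNED.match(line)
        if not match:
            problems.append(f"not pinned to an exact version: {line}")
        elif match.group(1).lower() not in allowlist:
            problems.append(f"not on the allowlist: {match.group(1)}")
    return problems
```

A CI step could fail the build whenever the returned list is non-empty, which keeps range constraints and unreviewed packages out of the dependency tree.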

An effective SDLC security program must treat dependency risk as first-order.

Foundational Secure-SDLC Practices

Embedding security from the earliest planning artifacts to postrelease operations requires far more than scattered controls. It demands a codified system of technical standards, design discipline, and cultural reinforcement.

Governance and Security Standards

Governance defines what good looks like. Without it, development teams operate in fragmented security models. Secure SDLCs formalize control objectives, code hygiene rules, threat mitigation patterns, and compliance mandates into enforceable policy sets.

Standards must be explicit, versioned, and tied to technical reference implementations. They should cover language-specific rules for memory safety, parameter validation, authentication patterns, and cryptographic operations. Tooling enforces them across pipelines through static checks, IDE plugins, and policy gates.

Program success hinges on operationalizing governance, which means applying risk-based policy exceptions, publishing reference projects, and measuring adoption across teams.

Proven Frameworks, Languages, and Libraries

Baseline security improves when teams standardize on curated, enterprise-approved technology stacks. Restricting development to vetted programming languages and libraries limits the attack surface and ensures predictable behavior under scrutiny.

Language decisions affect memory safety, concurrency behavior, input handling, and resilience to undefined states. Preferred frameworks should follow the principle of secure by default, avoid dynamic code evaluation, and minimize implicit behavior. Only libraries maintained by trusted maintainers with verifiable provenance and recent activity should enter the ecosystem.

Environments must restrict unauthorized package installs and enforce version pinning to reduce drift and support reproducibility.

Threat Modeling and Design Review

Security can’t be strapped onto a broken design. Threat modeling and architectural review reveal systemic flaws before they reach implementation.

Threat models identify trust boundaries, validate authentication flow, and predict potential abuse paths. Engineers should simulate attacker perspective, map data flow against misuse cases, and trace privilege transitions across services.

Design reviews verify conformance to platform-wide architecture patterns. Reviewers check for encryption gaps, unsanitized entry points, broken access controls, and mutable states shared across contexts. Every major feature or architectural revision should trigger a repeat review cycle.

Models must evolve as code evolves. Version control for threat models is as important as it is for code.

Cryptography Policy

Teams must not invent cryptography. That’s a principle, not a suggestion.

Cryptographic policy defines authorized algorithms, key lengths, cipher modes, and implementation sources. It prohibits use of deprecated or weak algorithms and blocks direct use of cryptographic primitives in favor of high-level, tested APIs.

Key material must be generated, stored, rotated, and revoked using hardware-backed or cloud-native key management services. Systems must avoid hardcoding secrets, transmitting them over unencrypted channels, or reusing keys across tenants or environments.

Policy enforcement occurs through linters, secrets scanners, and CI checks on encryption-related code paths.
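
A CI check on encryption-related code paths might look like the following sketch, which walks a Python syntax tree and flags calls to hash functions that a policy has banned. The deny list and the `banned_hash_calls` helper are assumptions for illustration, and the check only recognizes direct `hashlib.<name>(...)` and `hashlib.new("<name>")` calls.

```python
import ast

BANNED_HASHES = {"md5", "sha1"}  # assumption: an example policy's deny list


def banned_hash_calls(source: str):
    """Return sorted (line, algorithm) pairs for banned hashlib calls in source."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        func = node.func
        is_hashlib_attr = (
            isinstance(func, ast.Attribute)
            and isinstance(func.value, ast.Name)
            and func.value.id == "hashlib"
        )
        if not is_hashlib_attr:
            continue
        if func.attr in BANNED_HASHES:
            findings.append((node.lineno, func.attr))
        elif func.attr == "new" and node.args and isinstance(node.args[0], ast.Constant):
            name = str(node.args[0].value).lower()
            if name in BANNED_HASHES:
                findings.append((node.lineno, name))
    return sorted(findings)
```

Because the check inspects the syntax tree rather than raw text, it ignores mentions of these algorithms in comments and strings, which keeps false positives low.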

Supply-Chain and Engineering-Environment Hardening

A secure SDLC can’t rely on compromised tooling or untrusted inputs.

Hardening begins with the build environment. Developers must operate in isolated sandboxes. Build agents require ephemeral workloads, tight scoping of credentials, and immutability of artifacts. Code signing should occur within controlled environments, triggered only after passing all tests and approvals.

The supply chain must be cataloged through SBOMs. Verified sources, reproducible builds, and signature validation of packages and containers are mandatory. Artifact repositories must reject unsigned or unverified uploads. Only internal registries should be trusted as install sources.

Source control systems must enforce branch protection, multifactor authentication, fine-grained access policies, and audit logging.

Comprehensive Security Testing

Security testing must extend beyond unit and integration coverage. It must probe behavior under malformed input, invalid state, and adversarial assumptions.

The SDLC should apply multiple testing layers:

- Static analysis to enforce code quality and spot risky constructs

- Dynamic analysis to simulate runtime conditions and validate error handling

- Software composition analysis to track third-party vulnerabilities

- Fuzzing and mutation testing to detect unanticipated edge-case failures

Coverage metrics must go beyond functional logic to incorporate security-relevant paths. Every critical asset interaction, authorization check, and cryptographic operation should appear in test coverage reports.
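
As a rough illustration of the fuzzing layer, the sketch below feeds random byte strings to a parser and records any failure other than the parser's documented rejection. Both `parse_length_prefixed` (a toy target) and `fuzz` are hypothetical. Production fuzzers are coverage-guided and far more effective than this random approach.

```python
import random


def parse_length_prefixed(data: bytes) -> bytes:
    """Toy parser: the first byte declares the payload length."""
    if not data:
        raise ValueError("empty input")
    length = data[0]
    payload = data[1:1 + length]
    if len(payload) != length:
        raise ValueError("truncated payload")
    return payload


def fuzz(target, iterations=1000, seed=0):
    """Call target with random inputs; return inputs that fail unexpectedly."""
    rng = random.Random(seed)  # seeded so failures are reproducible
    crashes = []
    for _ in range(iterations):
        size = rng.randrange(0, 16)
        data = bytes(rng.randrange(256) for _ in range(size))
        try:
            target(data)
        except ValueError:
            pass  # documented rejection of malformed input
        except Exception as exc:  # anything else is a defect worth triaging
            crashes.append((data, repr(exc)))
    return crashes
```

An empty result for the toy parser shows that it rejects malformed input only through its documented error path. Any entry in the list is an input worth turning into a regression test.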

Operational Platform Security, Monitoring, and Response

Deployment isn’t the end of security responsibility. A secure SDLC must plan for detection, containment, and post-incident learning.

Cloud platforms must follow the principle of least privilege, particularly as it applies to denying outbound traffic by default, enforcing workload identity, and attaching minimal runtime permissions. Network policies must constrain lateral movement and protect management interfaces.

Monitoring must track indicators of compromise — unexpected file access, container escapes, permission elevation, and deviation from known-good baselines.

Response plans should integrate with incident workflows, including rollback procedures, forensic data collection, and notification criteria. The SDLC must support instrumented builds that emit meaningful telemetry and maintain audit traceability across services.

Security Education and Culture Building

Secure software comes from informed engineers. Programs that rely solely on security gatekeepers fail at scale.

Training must evolve past annual compliance slides. Engineers need access to contextual, on-demand learning tied to their codebase. Training should incorporate secure design patterns, misuse case studies, and architecture-specific deep dives.

Security teams should publish internal guidance, maintain annotated code examples, and run feedback loops to learn from code review findings. Regular red-teaming, gamified capture-the-flag challenges, and office hours build awareness into daily work.

Culture shifts when engineers take pride in threat modeling, celebrate security findings, and treat secure code as craft.

Tooling and Automation Layers

Unlike point-in-time audits, security tooling must function as an always-on system of accountability. Effective security engineering relies on precise automation embedded across pipelines, staging gates, and deployment workflows.

Static and Composition Analysis in CI

Security begins where code originates. SAST identifies syntactic patterns and logic errors that lead to injection flaws, insecure deserialization, or improper input handling.

To succeed in CI, static scans must be fast, incremental, and policy aware. They must support branch-level suppression tracking and provide actionable, context-rich findings that developers can triage within pull requests.

Software composition analysis complements static testing by evaluating open-source dependencies. It surfaces known vulnerabilities, licensing conflicts, and unsafe transitive packages. SCA must pin versions, flag malicious packages, and enforce per-repo allowlists.

CI tooling should reject unsafe merges based on rule violations and enforce baseline risk thresholds before test execution even begins.

Dynamic and Fuzz Testing in Staging

Static analysis can’t detect runtime misbehavior. Staging environments must support dynamic application security testing (DAST), behavior fuzzing, and instrumentation-based analysis.

DAST tools simulate external attacker behavior, scanning for response anomalies, injection vectors, and authentication bypasses. Fuzzers inject malformed, randomized, or edge-case input into APIs, protocols, or user flows to trigger unintended states or resource exhaustion.

In cloud-native architectures, service-level fuzzing must extend beyond API surface to include event-driven systems, message queues, and function triggers.

Dynamic analysis must integrate into pipeline staging steps, automatically deploy test environments, and produce security gate signals for subsequent delivery stages.

Automated Canary Analysis and Progressive Delivery Guards

Modern deployment strategies like canary, blue-green, and rolling enable granular risk gating at runtime. Security instrumentation must evolve with those delivery models.

Canary analysis platforms use statistical telemetry, log anomaly detection, and error budget consumption to automatically compare baseline and experimental versions. Security signals must feed into those platforms.

Progressive delivery systems should reject promotion if telemetry indicates drift in authentication failures, unexpected outbound traffic, or crash signatures aligned with prior vulnerability classes.

Security engineers must define blocking thresholds tied to functional service-level indicators, not just performance. For example, sudden surges in access-denied responses from a single identity region should halt rollout.
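
A blocking threshold of this kind can be sketched as a comparison between baseline and canary access-denied rates. The `should_halt_rollout` function, its default ratio, the minimum sample size, and the rate floor are illustrative assumptions. Canary analysis platforms typically apply statistical tests rather than a fixed ratio.

```python
def should_halt_rollout(baseline_denied, baseline_total, canary_denied, canary_total,
                        max_ratio=2.0, min_requests=100):
    """Return True if the canary's access-denied rate is suspiciously high."""
    if canary_total < min_requests:
        return False  # too little canary traffic to judge
    baseline_rate = baseline_denied / baseline_total if baseline_total else 0.0
    canary_rate = canary_denied / canary_total
    # A small floor keeps a near-zero baseline from flagging a single denial.
    floor = 0.001
    return canary_rate > max(baseline_rate, floor) * max_ratio
```

With these defaults, a baseline of 10 denials in 10,000 requests and a canary showing 50 in 1,000 would halt promotion, while a canary showing 1 in 1,000 would pass.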

Progressive delivery guards reinforce zero-trust principles by combining deployment velocity with conditional observability and rollback logic.

SBOM Generation, Sigstore/Cosign Signing, In-Toto Provenance

Software provenance is critical for establishing trust, preventing tampering, and enabling breach investigations. Security programs should treat build transparency as a first-class control surface.

SBOMs enumerate dependencies, their versions, licensing, and origin metadata. SBOMs should follow SPDX or CycloneDX formats and embed into artifacts as attestations. CI tools should autogenerate them per build and publish alongside outputs.
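
The shape of a per-build SBOM can be sketched as follows. The `minimal_sbom` helper is hypothetical, and the output is a deliberately small subset. The field names are intended to follow the CycloneDX JSON layout, and the package URLs assume Python packages from PyPI. Validate any real output against the official schema, and prefer an established generator in production.

```python
import json


def minimal_sbom(components):
    """Build a small CycloneDX-style document from (name, version) pairs."""
    return {
        "bomFormat": "CycloneDX",
        "specVersion": "1.5",
        "version": 1,
        "components": [
            {
                "type": "library",
                "name": name,
                "version": version,
                "purl": f"pkg:pypi/{name}@{version}",  # assumes PyPI packages
            }
            for name, version in sorted(components)
        ],
    }


if __name__ == "__main__":
    print(json.dumps(minimal_sbom([("example-lib", "1.0.0")]), indent=2))
```

Sorting the components keeps the output stable between builds, so a change in the SBOM reflects a change in dependencies rather than ordering noise.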

Sigstore, through its Cosign tool, provides open tooling for signing container images and verifying those signatures. Signing must occur in ephemeral, policy-constrained environments using short-lived credentials.

In-toto extends provenance tracking by capturing metadata across the full SDLC, chaining signatures to attest what code, tests, tools, and actors were involved in building and releasing each artifact. The chain allows consumers to verify integrity against tampering or drift.
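
The chaining idea can be sketched by recording, for each pipeline step, the digests of its inputs (materials) and outputs (products), then checking that each step consumed exactly what the previous step produced. This is a simplified assumption of the concept: the helpers are hypothetical, signatures are omitted, and it does not use the in-toto libraries or metadata format.

```python
import hashlib


def digest(data: bytes) -> str:
    """SHA-256 hex digest of a file's contents."""
    return hashlib.sha256(data).hexdigest()


def make_step(name, materials, products):
    """Record one pipeline step: digests of its inputs and outputs."""
    return {
        "step": name,
        "materials": sorted(digest(m) for m in materials),
        "products": sorted(digest(p) for p in products),
    }


def chain_is_consistent(steps):
    """True if every step's materials equal the previous step's products."""
    return all(
        cur["materials"] == prev["products"]
        for prev, cur in zip(steps, steps[1:])
    )
```

If a binary is swapped between the build and package steps, the package step's materials no longer match the build step's products and the check fails.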

Tooling must enforce signature verification at deployment time and maintain audit logs for compliance traceability.

Frameworks and Standards for Secure SDLC

Security initiatives that rely solely on tools or tribal knowledge degrade over time. Frameworks codify expectations, provide benchmarks, and force long-horizon thinking. Effective organizations adopt, tailor, and operationalize these standards across teams and vendors.

NIST Secure Software Development Framework (SSDF)

The NIST SSDF (Special Publication 800-218) provides a baseline of practices for producing secure software. It organizes its guidance into four functional groups:

- Prepare the Organization

- Protect the Software

- Produce Well-Secured Software

- Respond to Vulnerabilities

SSDF emphasizes artifact traceability, formal policies, and secure-by-design defaults. It calls for documenting toolchain requirements, enforcing configuration integrity, and verifying third-party code against security criteria before integration.

Unlike checklists that encourage compliance theater, SSDF expects repeatable processes. It requires evidence of enforcement, not intent. Internal teams must demonstrate that activities such as code review, access control, and tamper detection happen predictably and measurably.

SSDF aligns with Executive Order 14028 and Federal Acquisition Regulation updates, making it a procurement requirement for many public-sector and adjacent vendors. For cloud-native organizations, SSDF offers structure for securing ephemeral workloads, shifting verification left, and proving trustworthiness to customers and regulators.

OWASP SAMM and ASVS

The Software Assurance Maturity Model (SAMM) focuses on how teams build software, not just what they build. In its current version (v2), it evaluates maturity across 15 security practices grouped into five business functions, covering areas such as design review, defect management, and education.

SAMM supports incremental adoption. Organizations can benchmark current maturity, define target levels, and prioritize improvements that match resources and risk posture.

The Application Security Verification Standard (ASVS) defines security control objectives for applications at three increasing levels of rigor. It includes detailed guidance on authentication, session management, data protection, and business logic.

Unlike SAMM, ASVS focuses on what software must achieve rather than how teams achieve it. It enables security teams to define acceptance criteria for dev teams, measure test coverage, and identify gaps in enforcement.

SAMM and ASVS are especially effective when paired. SAMM reveals process weaknesses, while ASVS validates whether controls exist in the product. Both frameworks encourage security ownership within development workflows rather than imposing it externally.

Supply Chain Levels for Software Artifacts (SLSA)

SLSA (pronounced “salsa”) is a security framework for protecting the integrity of software supply chains. As originally published (v0.1), it defines four progressive levels of assurance for build provenance, tamper resistance, and artifact integrity.

At Level 1, a project must support basic build script automation. Level 2 requires signed provenance and build service isolation. Level 3 demands that all builds occur in a fully auditable, nonfalsifiable environment. Level 4 enforces reproducible builds with two-person code review and cryptographic validation. The v1.0 specification reorganized these levels into tracks and currently defines Build Levels 1 through 3, so confirm which version a tool or vendor references.

SLSA offers a path toward defensible provenance. It formalizes expectations around build system hygiene, dependency curation, and trusted distribution channels. The framework emerged from Google's internal Binary Authorization and has been adopted by OpenSSF as a shared language for secure software pipelines.

Organizations that adopt SLSA begin by instrumenting artifact signing, introducing ephemeral CI runners, and publishing SBOMs. Over time, they shift toward hermetic builds and enforce chain-of-custody policies that reduce insider risk and third-party compromise.

DevSecOps Integration

Security at scale requires more than automation. It requires orchestration across people, policy, and pipelines. DevSecOps applies infrastructure as code and continuous integration principles to security enforcement, enabling security to move at the pace of development without sacrificing precision or accountability.

Policy as Code Pipelines

Policy as code allows organizations to define security, compliance, and operational guardrails as executable logic rather than human-readable documents. These policies are versioned, peer-reviewed, and enforced in CI/CD workflows.

Common policy engines include Open Policy Agent (OPA) for general-purpose rules and Conftest for file-based validations. Tools such as these evaluate configuration files, infrastructure manifests, and container definitions before deployment. Violations can block builds, flag issues for triage, or log exceptions with traceability.

By embedding policy into build pipelines, organizations reduce the risk of drift between dev, test, and prod. Policy as code ensures that decisions around encryption enforcement, identity scope, and network exposure aren’t subject to operator memory or spreadsheet checklists.

Policy logic must be auditable, testable, and portable. Mature teams maintain policy repositories alongside application code, with rules tied to compliance controls such as CIS Benchmarks, NIST 800-53, or internal standards. This enables consistent enforcement across Kubernetes clusters, Terraform stacks, and Helm charts.
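
To show what a guardrail looks like as executable logic, the sketch below evaluates a resource definition against two rules. In practice such rules are usually written in a policy language such as Rego and run by OPA or Conftest. This example is an illustrative stand-in, and the resource shape, field names, and `evaluate` function are hypothetical.

```python
def evaluate(resource: dict) -> list:
    """Return policy violations for one parsed infrastructure resource."""
    violations = []

    # Rule 1: storage must be encrypted at rest.
    if resource.get("type") == "storage_bucket" and not resource.get("encrypted", False):
        violations.append("storage buckets must enable encryption")

    # Rule 2: SSH must not be reachable from the entire internet.
    for rule in resource.get("ingress", []):
        if rule.get("cidr") == "0.0.0.0/0" and rule.get("port") == 22:
            violations.append("SSH must not be open to 0.0.0.0/0")

    return violations
```

Because the rules are plain functions over data, they can be unit tested and versioned like any other code, and a pipeline can block a build whenever the list of violations is non-empty.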

GitOps for Security Drift Remediation

GitOps enforces desired state by syncing infrastructure from declarative source repositories. It treats Git commits as the source of truth and uses agents like Argo CD or Flux to reconcile live environments to match intended configurations.

For security teams, GitOps introduces a way to detect and resolve drift without relying on alert triage. If an engineer manually changes a pod security policy, for example, the GitOps controller rolls it back automatically unless the change is codified and approved in Git.

Drift remediation becomes deterministic. Teams stop chasing unapproved firewall changes or excessive privilege grants across environments. Instead, they enforce immutability through automated reconciliation and track changes with Git-based audit logs.
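
The reconciliation loop reduces to comparing desired state from Git with live state and reverting differences. The sketch below models both as flat dictionaries, which is a simplifying assumption, and the `detect_drift` and `reconcile` helpers are hypothetical. Controllers such as Argo CD and Flux operate on full resource manifests.

```python
def detect_drift(desired: dict, live: dict) -> dict:
    """Return every key whose live value differs from the desired value."""
    drift = {}
    for key in desired.keys() | live.keys():
        if desired.get(key) != live.get(key):
            drift[key] = {"desired": desired.get(key), "live": live.get(key)}
    return drift


def reconcile(desired: dict, live: dict) -> dict:
    """Return the state after reverting live settings to what Git declares."""
    # Settings absent from Git are dropped and changed values are restored.
    return dict(desired)
```

A manual change, such as disabling a non-root requirement or adding a debug flag, appears in the `detect_drift` output and disappears after `reconcile`, leaving the Git history as the only record of approved change.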

GitOps aligns with zero trust models by reducing implicit administrative paths. All change activity becomes explicit, reviewable, and revertible. Combined with policy as code, GitOps ensures that even corrective security actions follow controlled, traceable flows.

Continuous Compliance Evidence

DevSecOps does more than prevent violations. It generates continuous evidence that controls are enforced. Every policy evaluation, build signature, and deployment decision produces artifacts that can be traced back to the policy, the code, and the actor that triggered it.

Pass/fail logs, attestation signatures, SBOM hashes, Git commit metadata, and similar records form the backbone of modern compliance audits. Instead of relying on quarterly sample screenshots, auditors can review CI/CD telemetry that shows exactly when and how each control was enforced.

Evidence is most useful when structured and queryable. Mature teams use frameworks like in-toto or Google's Binary Authorization to link artifact lineage to policy outcomes. Others use security information and event management (SIEM) systems to ingest policy engine logs, build telemetry, and deployment traces as compliance signals.
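
To make "structured and queryable" concrete, here is a minimal sketch of an evidence record emitted as one JSON line per policy decision. The field names are assumptions for illustration and do not follow the in-toto or Binary Authorization schemas.

```python
import hashlib
import json


def evidence_record(
    artifact: bytes,
    policy_id: str,
    commit: str,
    actor: str,
    passed: bool,
    timestamp: str,
) -> str:
    """Serialize one policy decision as a JSON line a SIEM could ingest."""
    record = {
        # The digest ties the decision to one exact artifact.
        "artifact_sha256": hashlib.sha256(artifact).hexdigest(),
        "policy_id": policy_id,
        "commit": commit,
        "actor": actor,
        "result": "pass" if passed else "fail",
        "timestamp": timestamp,
    }
    # Sorted keys keep the output stable, which simplifies diffing and hashing.
    return json.dumps(record, sort_keys=True)
```

Because each line carries the artifact digest, commit, and actor together, an auditor's question such as "which deployments of this artifact passed the encryption policy" becomes a query rather than a screenshot hunt.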

The goal isn’t just visibility. It’s proving enforcement without halting innovation. Continuous compliance lets engineering scale without degrading security posture, while giving security leadership the visibility needed to meet regulatory and contractual obligations.

Metrics and Continuous Improvement

Effective SDLC security demands empirical validation that security outcomes improve over time without impeding delivery. Metrics must connect engineering activity with security posture, operational risk, and business velocity.

DORA plus Security KPIs

The four DORA metrics — deployment frequency, lead time for changes, change failure rate, and mean time to recovery — established a foundation for measuring DevOps performance. On their own, they offer no insight into whether rapid delivery aligns with secure delivery.

To close that gap, security leaders now extend DORA baselines with SDLC-specific indicators. These include:

- Percentage of builds passing security gates on first attempt: Signals code quality and security alignment upstream.

- Mean time to remediate critical vulnerabilities after discovery: Measures responsiveness and patching efficiency.

- Rate of policy violations per deployment artifact: Tracks hygiene drift over time.

- Volume of unreviewed or unmerged security PRs: Reflects backlog stress or cross-team friction.

Correlation across DORA and security KPIs helps identify tradeoffs. For example, higher deployment frequency paired with rising security gate failures may indicate a breakdown in developer enablement or tooling coverage.
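
Two of the indicators listed above can be computed from ordinary pipeline records. The sketch below assumes hypothetical record shapes: builds carry a `passed_first_attempt` flag, and vulnerabilities carry `severity`, `found_at`, and `fixed_at` fields.

```python
from datetime import datetime
from statistics import mean


def first_pass_gate_rate(builds: list[dict]) -> float:
    """Fraction of builds that passed security gates on the first attempt."""
    if not builds:
        return 0.0
    passed = sum(1 for b in builds if b["passed_first_attempt"])
    return passed / len(builds)


def mean_time_to_remediate_hours(vulns: list[dict]) -> float:
    """Mean hours from discovery to fix, over fixed critical findings only."""
    durations = [
        (v["fixed_at"] - v["found_at"]).total_seconds() / 3600
        for v in vulns
        if v["severity"] == "critical" and v.get("fixed_at") is not None
    ]
    return mean(durations) if durations else 0.0
```

Plotting these alongside deployment frequency makes the tradeoff described above visible: if frequency climbs while the first-pass rate falls, the cause is likely upstream of the gate.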

Value-Stream Flow Efficiency

Value-stream metrics quantify how efficiently security activities integrate into delivery flows. Rather than focusing on tickets closed or code scanned, they evaluate the end-to-end path from issue detection to resolution.

Flow efficiency measures the ratio of active work time to total elapsed time. Insecure dependencies might be identified in minutes but take weeks to resolve due to misaligned team ownership, approval bottlenecks, or missing context.

Another signal is queue aging — how long security issues remain in backlog states without progress. High average age on risk-tagged issues often indicates disconnects between AppSec and product teams or a lack of actionable prioritization.
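
Both signals reduce to simple arithmetic. The sketch below assumes active and elapsed time are already tracked in hours and that each backlog issue has an opened date. The function names are illustrative.

```python
from datetime import date


def flow_efficiency(active_hours: float, elapsed_hours: float) -> float:
    """Ratio of hands-on work time to total elapsed time (0.0 to 1.0)."""
    if elapsed_hours <= 0:
        raise ValueError("elapsed_hours must be positive")
    return active_hours / elapsed_hours


def average_queue_age_days(opened: list[date], today: date) -> float:
    """Mean age, in days, of issues still sitting in the backlog."""
    if not opened:
        return 0.0
    return sum((today - d).days for d in opened) / len(opened)
```

A dependency fix that needs 6 hours of work but takes 10 days (240 hours) to ship has a flow efficiency of 0.025, which means 97.5 percent of the elapsed time was waiting rather than work.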

Security-aware value-stream mapping exposes hidden waste. It allows leaders to quantify friction introduced by manual handoffs, overloaded review queues, or context switching. Streamlining these choke points reduces breach exposure and delivery lag.

Security Backlog Triage and Risk Burn-Down

A mature SDLC security program avoids alert fatigue by continuously refining triage and resolution. Risk burn-down tracking shows how much high-priority exposure remains unresolved and how quickly it's being reduced over time.

Every ticket in the security backlog must be tagged with impact severity, exploitability, and remediation effort. Triage workflows should route low-severity findings through automation or collective suppression, while reserving human analysis for exploitable paths with production reach.

Effective backlog metrics include:

- Ratio of exploitable-to-informational findings: Indicates scanning precision and triage overhead.

- Average time from triage to fix for exploitable issues: Reflects velocity of risk reduction.

- Percentage of backlog addressed by automated remediation: Measures investment payoff in orchestration.
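
The three backlog metrics above can be derived from tagged findings. This is a sketch under assumed field names (`exploitable`, `days_to_fix`, `auto_remediated`), not a prescribed schema.

```python
def backlog_metrics(findings: list[dict]) -> dict:
    """Compute the three backlog metrics from a list of tagged findings."""
    exploitable = [f for f in findings if f["exploitable"]]
    informational = [f for f in findings if not f["exploitable"]]
    # Only exploitable findings that have actually been fixed count here.
    fixed = [f for f in exploitable if f.get("days_to_fix") is not None]
    automated = [f for f in findings if f.get("auto_remediated", False)]

    return {
        "exploitable_to_informational": (
            len(exploitable) / len(informational) if informational else float("inf")
        ),
        "avg_days_triage_to_fix": (
            sum(f["days_to_fix"] for f in fixed) / len(fixed) if fixed else 0.0
        ),
        "pct_auto_remediated": (
            100 * len(automated) / len(findings) if findings else 0.0
        ),
    }
```

Tracked per sprint or per release, the same dictionary yields a burn-down series without any extra tooling.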

Security maturity isn’t measured by how many vulnerabilities you find. It’s measured by how effectively you resolve the right ones at the right time, without slowing the system you strive to protect.

Advancements in Software Supply Chain Defense

Modern SDLC security extends beyond static control checklists and policy frameworks. The most forward-leaning organizations incorporate zero-trust principles into the development environment, pressure-test controls using adversarial simulation, and apply machine learning to accelerate secure decision-making.

Zero-Trust Build and Release Environments

Zero-trust principles are no longer reserved for user access and network segmentation. They now extend into CI/CD pipelines, where each interaction, tool, and asset must authenticate and authorize with scoped credentials and time-limited trust.

Build agents must operate in isolated, ephemeral environments with no persistent secrets or outbound access by default. All code dependencies must be fetched from authenticated, verified sources with integrity guarantees. Any attempt to reuse or modify build instructions outside versioned IaC should trigger a failure.

To enforce traceability, each stage of artifact creation must append verifiable provenance data. Developers shouldn’t be able to bypass controls, disable signing, or overwrite audit trails. Systems such as Sigstore, in-toto, and SLSA attestations formalize these guarantees and enable third-party validation of build integrity.
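
One way to picture stage-by-stage provenance is a hash chain in which every entry commits to the one before it. The sketch below is a teaching model only. It does not use the Sigstore, in-toto, or SLSA formats, and the entry fields are assumptions.

```python
import hashlib
import json

_BODY_FIELDS = ("stage", "artifact_sha256", "prev")


def _digest(body: dict) -> str:
    """Stable SHA-256 over a provenance entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_stage(chain: list[dict], stage: str, artifact: bytes) -> list[dict]:
    """Return a new chain with one more entry linked to the previous one."""
    prev = chain[-1]["entry_digest"] if chain else ""
    body = {
        "stage": stage,
        "artifact_sha256": hashlib.sha256(artifact).hexdigest(),
        "prev": prev,
    }
    return chain + [{**body, "entry_digest": _digest(body)}]


def verify_chain(chain: list[dict]) -> bool:
    """Check every link; an edited or removed entry breaks the chain."""
    prev = ""
    for entry in chain:
        body = {k: entry[k] for k in _BODY_FIELDS}
        if entry["prev"] != prev or entry["entry_digest"] != _digest(body):
            return False
        prev = entry["entry_digest"]
    return True
```

An unsigned chain like this only detects edits if the latest digest is stored somewhere the attacker cannot rewrite. Real attestation systems add signatures and transparency logs for that reason.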

Zero trust in CI/CD also includes granular RBAC in orchestration platforms, mandatory approvals tied to identity, and alerting on suspicious command invocation, such as unexpected system-level tooling within container builds.

Chaos Engineering for Security Controls

Just as chaos testing validates fault tolerance under stress, it now plays a growing role in testing SDLC security assumptions. Security chaos engineering introduces controlled failure modes to simulate attacker behavior, test control resilience, and quantify blast radius.

For example, simulate a compromised CI token with scoped credentials and verify whether lateral movement into secrets stores or release systems is blocked. Introduce signed but intentionally malicious artifacts into the package registry and monitor whether validation gates detect tampering. Temporarily disable a known security control and trace whether detection logic or layered mitigations prevent impact.

The goal isn't to cause failure but to surface brittle assumptions, such as incomplete visibility across release steps or undocumented credential reuse. Chaos experiments should be codified, replayable, and incorporated into security test stages alongside functional and performance tests.

Security observability must extend to failure diagnostics. If a chaos test corrupts a build and no alert fires, the issue lies not in the test but in the telemetry blind spots it exposed.
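
A codified, replayable experiment might look like the sketch below. `PipelineModel` is a toy stand-in for a real authorization layer, and the scope and resource names are hypothetical. The point is the shape of the experiment: attempt lateral movement with a stolen token, then record what was reached, what was blocked, and which alerts fired.

```python
from dataclasses import dataclass, field


@dataclass
class PipelineModel:
    """Toy authorization model: maps a scope to the resources it may reach."""

    grants: dict[str, set[str]]
    alerts: list[str] = field(default_factory=list)

    def access(self, scopes: set[str], resource: str) -> bool:
        allowed = any(resource in self.grants.get(s, set()) for s in scopes)
        if not allowed:
            # A denied attempt should leave a trace for detection logic.
            self.alerts.append(f"denied:{resource}")
        return allowed


def compromised_token_experiment(
    model: PipelineModel, stolen_scopes: set[str], targets: list[str]
) -> dict:
    """Try each target once with the stolen scopes and summarize the result."""
    reached = [t for t in targets if model.access(stolen_scopes, t)]
    blocked = [t for t in targets if t not in reached]
    return {"reached": reached, "blocked": blocked, "alerts": list(model.alerts)}
```

The experiment passes only if sensitive targets appear under `blocked` and each one has a matching alert. A blocked target with no alert is exactly the telemetry blind spot described above.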

AI-Assisted Code and Policy Review

Large language models and transformer-based classifiers now contribute to security across the SDLC by accelerating the review of both source code and declarative policy. Unlike traditional rule-based linting, AI-assisted tools contextualize structure, intent, and historical patterns to prioritize real defects.

For source code, AI can flag unsafe method use, improper authentication flows, or cryptographic misuse even when code lacks recognizable signatures. It can explain vulnerabilities in developer-native language and propose context-aware remediations that align with project conventions.

For policy as code, AI-assisted review can identify logic gaps, unreachable paths, overly permissive access, or unintended consequences. It can also map policy coverage against runtime behavior to highlight policy drift.

AI review systems should never operate autonomously. Their value lies in augmenting expert oversight, reducing fatigue, and freeing human reviewers to focus on complex, high-signal issues. The most advanced deployments integrate AI outputs into PR workflows, correlate findings with production logs, and continuously learn from remediation patterns.

Roadmap to Secure-SDLC Maturity

No organization hardens its development lifecycle by accident. Secure-SDLC maturity requires deliberate progression, operational alignment, and defensible measurement. Every phase must support the next, with clear traceability from initial gaps to implemented safeguards and measurable outcomes.

Baseline Assessment and Gap Analysis

Security maturity begins with an informed reckoning. A defensible SDLC security assessment must examine process-level enforcement, technical coverage, and execution fidelity. Frameworks like OWASP SAMM provide structure but can’t substitute for deep analysis of tool integration, control enforcement, and development behavior.

Scanners without alert consumption don’t reduce risk. Controls that block deployments without transparent resolution paths stall delivery. The assessment must surface both overengineered deadweight and underprotected attack paths. Asset inventory, system architecture, dependency tracking, threat modeling, testing, and patching all require scrutiny — not as independent capabilities but as a continuum of coverage.

Pilot Projects and Quick Wins

Early success requires precision. Teams should avoid platform-wide rollouts until they validate key controls in scoped pilot environments. Ideal pilot candidates include high-change services with moderate risk and a known release cadence. These contexts expose process friction and tool gaps without introducing disproportionate operational risk.

Quick wins might include tightening branch protections, automating secrets scanning, or introducing in-pipeline SCA enforcement. But they must connect to outcome metrics. A control added but never triggered doesn’t build maturity. Pilot phase outcomes should quantify issue detection, resolution latency, and developer participation in root cause analysis.

Each improvement must reduce cycle time or demonstrably reduce security risk. Success should build confidence in further automation and broader rollout.

Enterprise Rollout, Training, and Governance Model

Enterprise adoption requires operational rigor, executive commitment, and persistent enablement. Security tooling must interoperate with existing developer workflows across CI/CD platforms, version control systems, and ticketing infrastructure. Without reliable integration, controls either get bypassed or ignored.

Training programs must evolve past passive awareness. Role-based sessions should address the specific decisions, tools, and responsibilities of engineers, architects, and product managers. Secure code practices must be tied to real defects observed in the organization’s own environment, not generic industry examples.

Governance models must define how security standards propagate, how exceptions get approved, and how outcomes get audited. Continuous monitoring must detect compliance drift, and unresolved risks must escalate in ways that engineering leadership can’t ignore.

SDLC Security FAQs

A pre-commit hook is a script that runs automatically before a developer's code is committed to version control. In secure development, pre-commit hooks help enforce coding standards and prevent security regressions by checking for secrets, enforcing linting rules, scanning for known vulnerabilities, or blocking risky patterns before they enter the repository. Because the validation happens locally, it improves feedback speed and reduces the chance of flawed code propagating through the toolchain.
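
As a minimal sketch of such a hook, the script below scans staged changes for a few secret-like patterns. The three patterns are illustrative and far from exhaustive, and dedicated secret scanners cover many more cases. `main` relies on the standard `git diff --cached` command and would be called from an executable `.git/hooks/pre-commit` file.

```python
import re
import subprocess

# Illustrative patterns only; real scanners ship much larger rule sets.
SECRET_PATTERNS = {
    "AWS access key ID": re.compile(r"AKIA[0-9A-Z]{16}"),
    "private key block": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "hardcoded password": re.compile(r"password\s*=\s*['\"][^'\"]+['\"]", re.IGNORECASE),
}


def find_secrets(diff_text: str) -> list[str]:
    """Return the label of every pattern matched on an added diff line."""
    findings = []
    for line in diff_text.splitlines():
        # Inspect only added lines; skip the "+++ b/file" header lines.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        for label, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append(label)
    return findings


def main() -> int:
    """Exit status for the hook: a nonzero value makes Git abort the commit."""
    diff = subprocess.run(
        ["git", "diff", "--cached", "--unified=0"],
        capture_output=True, text=True, check=True,
    ).stdout
    findings = find_secrets(diff)
    for label in findings:
        print(f"blocked: possible {label} in staged changes")
    return 1 if findings else 0
```

To wire it in, the hook file would end with `raise SystemExit(main())`. Because hooks run locally and can be skipped with `git commit --no-verify`, the same check is usually repeated in CI.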

Artifact poisoning is the malicious alteration or substitution of software artifacts during or after the build process. Attackers may inject backdoors, overwrite trusted outputs, or swap legitimate files with tainted ones to compromise runtime environments or downstream systems. Without cryptographic integrity checks and trusted provenance, poisoned artifacts can enter production undetected, especially in complex CI/CD pipelines.

Differential fuzzing tests multiple implementations of the same functionality with identical randomized inputs and compares the outputs for inconsistencies. It's particularly effective when uncovering flaws in security-sensitive components such as cryptographic libraries or parsing engines. If two compliant implementations behave differently under the same inputs, the divergence may indicate a bug or a potential vulnerability.
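
The technique fits in a short harness. In this sketch the reference implementation is Python's built-in `bytes.fromhex`, and the candidate is a hand-rolled hex decoder with a deliberate bug (it rejects uppercase digits) so the harness has something to find. Both the candidate and the input generator are constructed for illustration.

```python
import random

HEX = "0123456789abcdef"


def decode_reference(s: str) -> bytes:
    """Reference implementation: the standard library hex decoder."""
    return bytes.fromhex(s)


def decode_candidate(s: str) -> bytes:
    """Hand-rolled decoder with a deliberate bug: uppercase digits fail."""
    out = bytearray()
    for i in range(0, len(s), 2):
        out.append(HEX.index(s[i]) * 16 + HEX.index(s[i + 1]))
    return bytes(out)


def outcome(fn, s: str) -> tuple:
    """Normalize a call into ('ok', value) or ('error', exception name)."""
    try:
        return ("ok", fn(s))
    except Exception as exc:
        return ("error", type(exc).__name__)


def differential_fuzz(trials: int, seed: int) -> list[str]:
    """Feed identical random inputs to both decoders; collect divergences."""
    rng = random.Random(seed)  # seeded so a failing run can be replayed
    alphabet = HEX + "ABCDEF"
    divergent = []
    for _ in range(trials):
        length = 2 * rng.randint(1, 4)  # even length, 2 to 8 characters
        s = "".join(rng.choice(alphabet) for _ in range(length))
        if outcome(decode_reference, s) != outcome(decode_candidate, s):
            divergent.append(s)
    return divergent
```

Every input the harness returns contains at least one uppercase digit, which points straight at the defect. In practice the two sides would be independent libraries, and each divergence would need triage to decide which side, if either, is wrong.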

A side-channel attack exploits indirect signals to extract sensitive information from a system. Rather than targeting a functional bug, the attacker observes how a system behaves while executing secure operations. In application security, even subtle differences in response times or error handling can reveal keys, credentials, or application logic when repeatedly measured under controlled input conditions.
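
A timing side channel in a secret comparison is a common concrete case. The sketch below contrasts a naive early-exit comparison with Python's `hmac.compare_digest`, which is designed to take time independent of where the inputs differ. The function names are chosen for this example.

```python
import hmac


def naive_equal(a: bytes, b: bytes) -> bool:
    """Leaky comparison: returns at the first mismatching byte.

    The more leading bytes an attacker guesses correctly, the longer the
    call runs, which can reveal the secret one byte at a time.
    """
    if len(a) != len(b):
        return False
    for x, y in zip(a, b):
        if x != y:
            return False
    return True


def constant_time_equal(a: bytes, b: bytes) -> bool:
    """Safer comparison for tokens, MACs, and other secret values."""
    return hmac.compare_digest(a, b)
```

Both functions return the same answers. The difference is only in how long they take, which is why this class of flaw rarely shows up in functional tests.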

Secure-by-design refers to embedding security principles during the architectural and design stages of software development. It anticipates threats and builds mitigations into the core structure. Secure-by-default, on the other hand, means that once deployed, the system’s out-of-the-box configuration prioritizes safety without requiring additional tuning. While secure-by-design governs how the software is conceived, secure-by-default governs how it behaves without administrator intervention.

Software tamper detection is the ability of an application or platform to recognize unauthorized modifications to its code, configuration, or runtime behavior. Techniques include checksums, cryptographic signatures, memory integrity verification, and runtime attestation. Effective tamper detection helps identify attempts to bypass security controls, alter application flow, or inject malicious payloads, particularly in environments where software may run in untrusted contexts.
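
The checksum-style techniques can be sketched with a keyed hash. This example uses HMAC-SHA256 from the standard library as a stand-in for a full digital signature. The key handling is deliberately simplified, and the function names are illustrative.

```python
import hashlib
import hmac


def seal(content: bytes, key: bytes) -> str:
    """Produce a keyed checksum (HMAC-SHA256) over code or configuration."""
    return hmac.new(key, content, hashlib.sha256).hexdigest()


def is_untampered(content: bytes, key: bytes, expected_seal: str) -> bool:
    """Recompute the seal and compare it in constant time."""
    return hmac.compare_digest(seal(content, key), expected_seal)
```

A plain hash would detect accidental corruption, but an attacker who can replace the file can usually replace the hash too. Keying the checksum, or using asymmetric signatures, means a valid seal cannot be forged without the key.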

Policy orchestration in CI/CD ensures that security, compliance, and operational policies are applied consistently and automatically across development pipelines. It coordinates multiple enforcement points so policies operate as a unified control system rather than siloed checks. Policy orchestration prevents insecure builds from advancing, enforces separation of duties, and provides traceability across toolchains.