
What Is Federated Learning? A Guide to Privacy-Preserving AI

Federated learning is a distributed method for training machine learning models in which multiple devices or organizations collaboratively learn a shared model without transferring their raw data.

Each participant trains the model locally on its own dataset and shares only model updates or parameters with a central server. The server securely aggregates these updates to improve the global model while keeping individual data private.

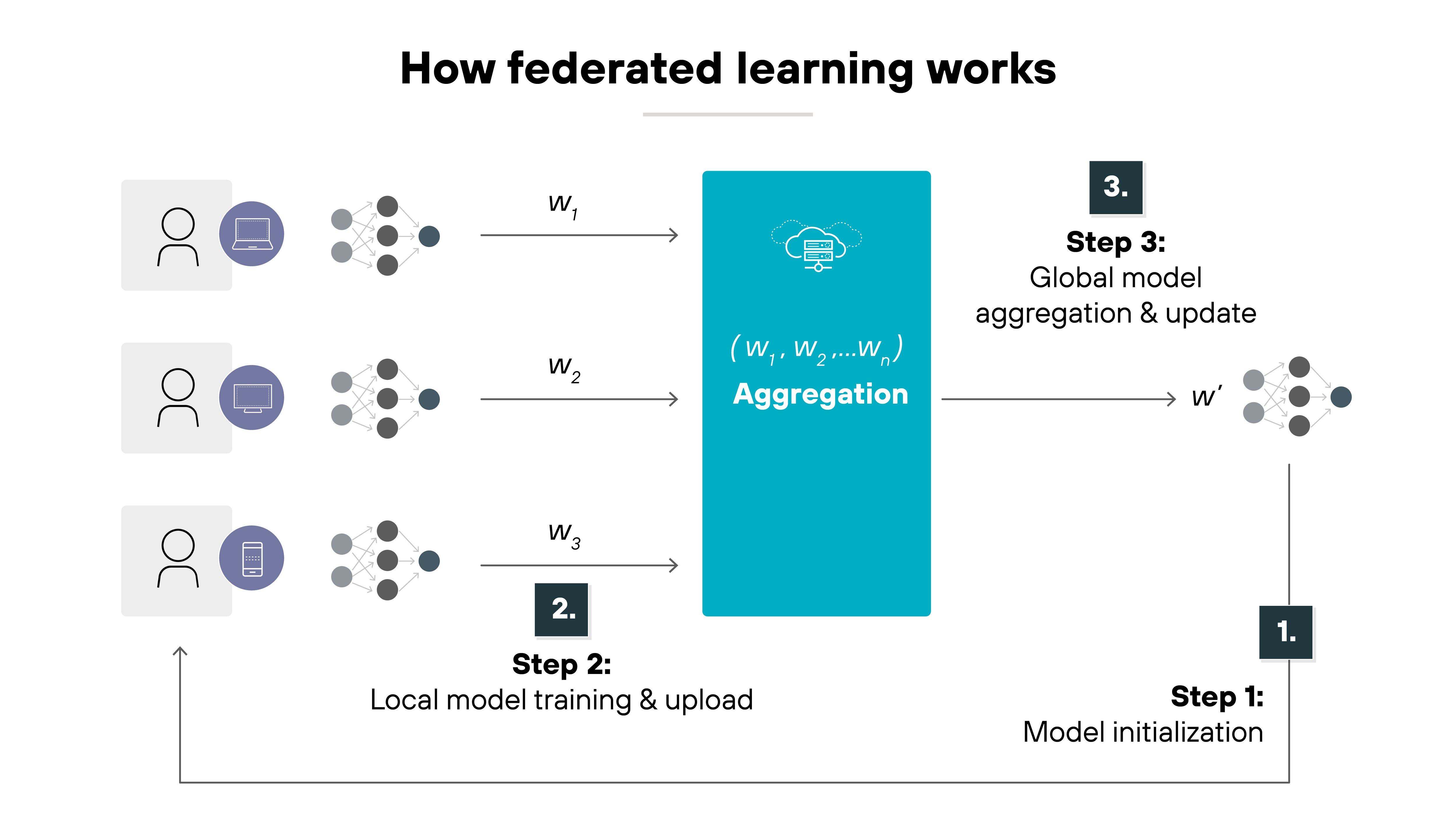

How does federated learning work?

Federated learning uses a simple but powerful cycle. It trains a shared model across many participants—like devices, organizations, or data centers—without ever moving the raw data.

Here's how it works.

- Initialization. The process begins when a central server creates a global model and sends it to each participating client.

- Local training. Each client uses its own data to improve the model on its device or system. The data never leaves that environment. Only model updates, such as adjusted weights or gradients, are prepared for sharing.

- Secure aggregation. Clients protect their updates before sending them to the central server, for example by masking or encrypting them. Secure aggregation protocols built on secure multiparty computation or homomorphic encryption let the server combine these protected updates without viewing individual contributions. Differential privacy can be layered on top by adding noise, so that even the combined result reveals little about any single participant.

- Global update. After aggregation, the server averages the clients' contributions, typically weighting each by the size of its local dataset as in the Federated Averaging (FedAvg) algorithm, and creates a new global model. That improved model is sent back to the clients for another training round. The cycle repeats until the model reaches the desired performance.
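The cycle above can be sketched in a few lines of Python. This is a minimal simulation, assuming a one-parameter linear model (y = w * x), plain gradient descent for local training, and the dataset-size weighting used by Federated Averaging (FedAvg). The client data and the helper names `local_train`, `fed_avg`, and `run_federated_training` are hypothetical, and secure aggregation is omitted, so in this toy version the server sees each update in the clear.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (x, y) pairs that stay on one client


def local_train(w: float, data: Dataset, lr: float = 0.01, epochs: int = 5) -> float:
    """Gradient descent on mean squared error, using only this client's data."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def fed_avg(client_weights: List[float], client_sizes: List[int]) -> float:
    """Average client models, weighting each by its number of examples."""
    total = sum(client_sizes)
    return sum(w * n for w, n in zip(client_weights, client_sizes)) / total


def run_federated_training(clients: List[Dataset], rounds: int = 50) -> float:
    w_global = 0.0  # initialization on the server
    for _ in range(rounds):
        # Local training: each client starts from the current global model.
        local_models = [local_train(w_global, data) for data in clients]
        # Aggregation and global update: only parameters reach the server.
        w_global = fed_avg(local_models, [len(d) for d in clients])
    return w_global


# Hypothetical clients whose data all follow y = 3x.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0)],
    [(0.5, 1.5), (1.5, 4.5), (2.5, 7.5)],
]
print(round(run_federated_training(clients), 3))  # prints 3.0
```

Real systems replace the scalar with millions of parameters and usually sample only a subset of clients each round, but the shape of the loop stays the same.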

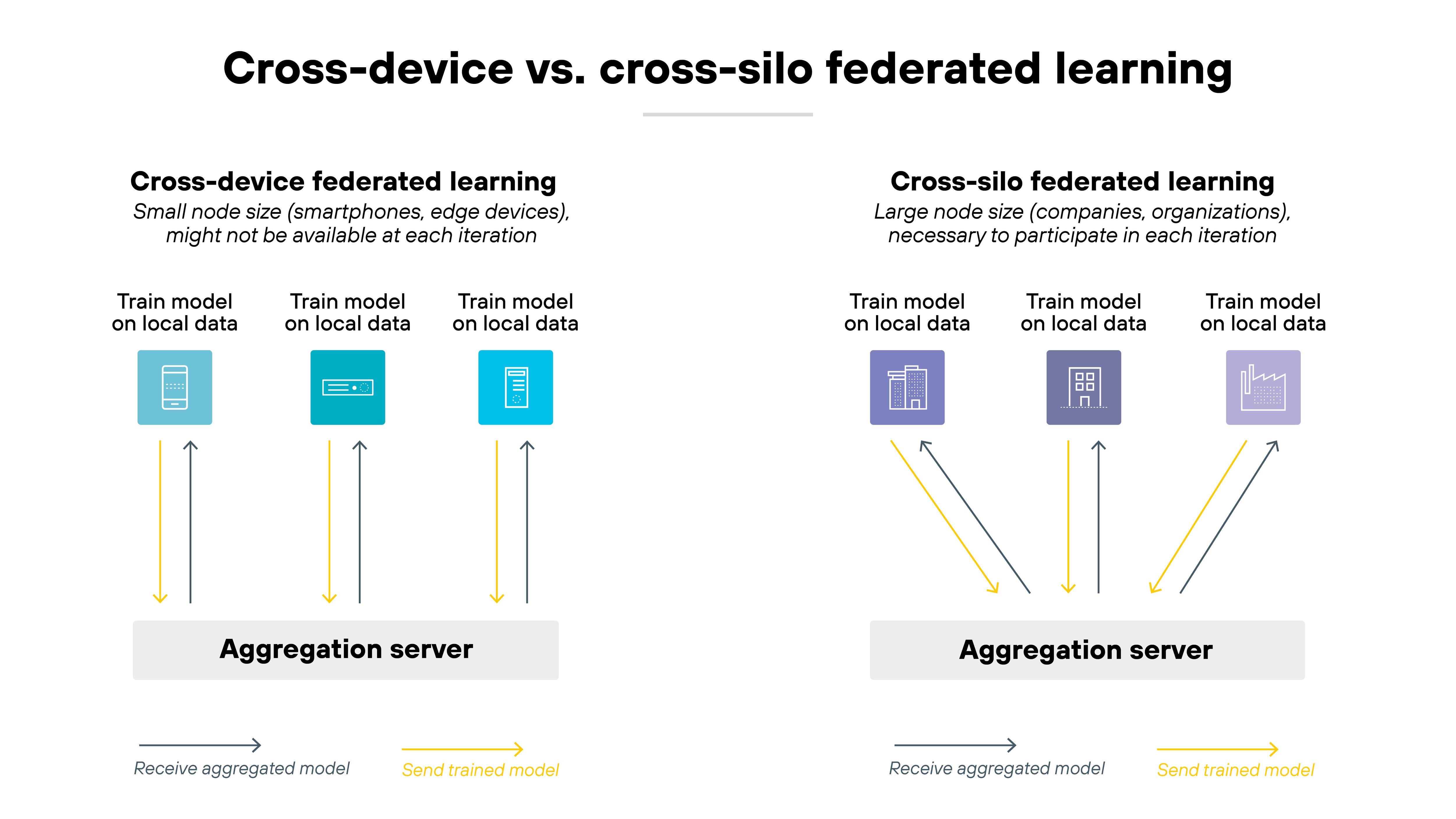

There are two main deployment types:

- Cross-device federated learning involves millions of small devices, such as smartphones, each training briefly when idle.

- Cross-silo federated learning uses fewer but more capable participants—like hospitals or banks—where each represents an entire organization.

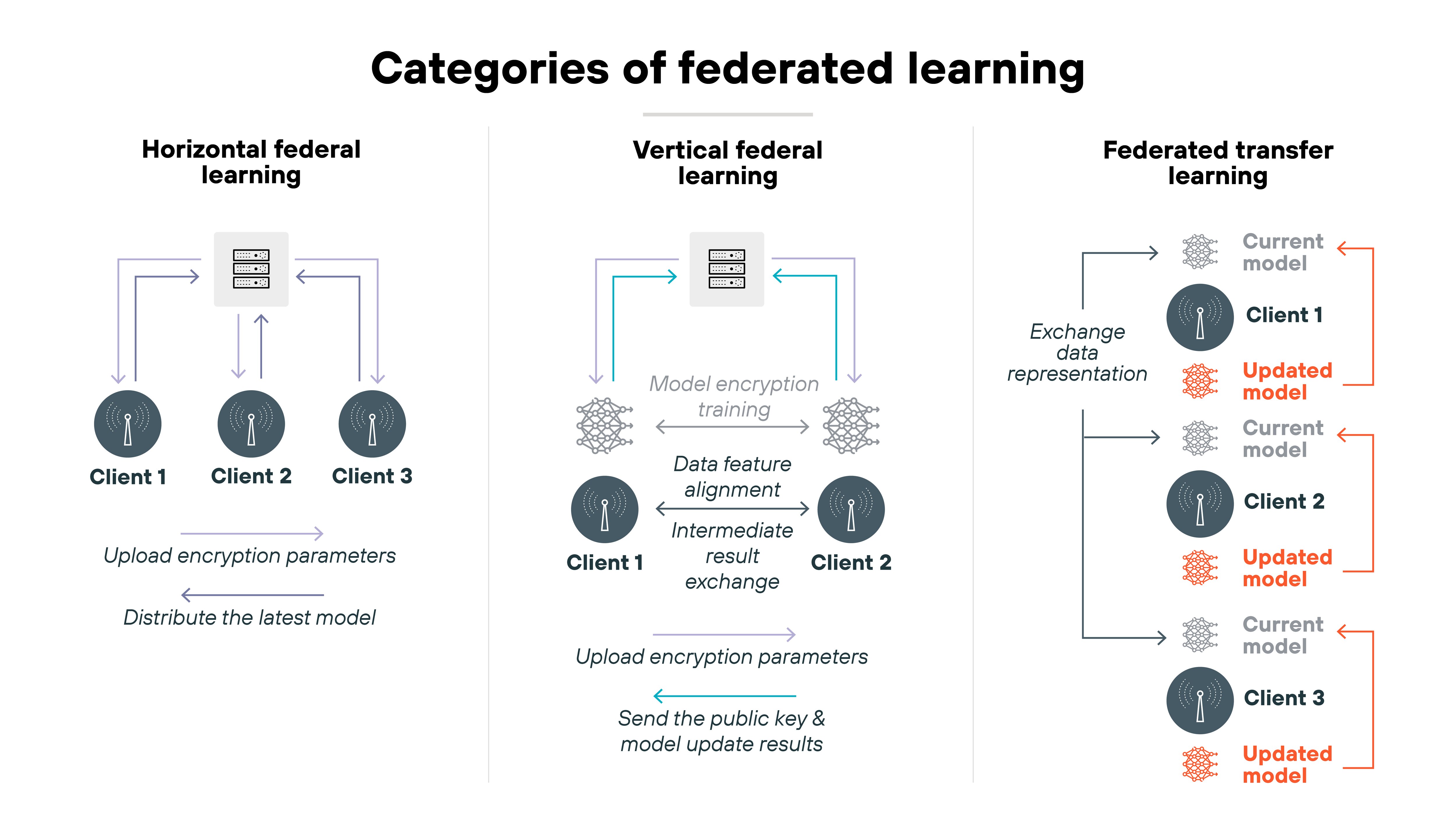

Federated learning also has three key variants:

- Horizontal FL (HFL) applies when participants share the same features but hold records about different users, such as multiple hospitals training on patient records with the same structure.

- Vertical FL (VFL) applies when participants hold different features about the same users, such as a bank and a retailer that serve the same customers.

- Federated transfer learning (FTL) connects organizations with little overlap in either users or features, using transfer learning to carry knowledge across that gap.
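One way to picture the difference between horizontal and vertical FL is to imagine a single joint table that no one actually holds, then ask how it is split among participants. The sketch below uses a made-up table; the column names, user IDs, and helper functions are hypothetical. In a real VFL system, matching shared users across parties also requires privacy-preserving record alignment, which is not shown.

```python
from typing import List, Tuple

Row = Tuple  # (user_id, age, income, purchases)

columns = ["user_id", "age", "income", "purchases"]
rows: List[Row] = [
    ("u1", 34, 52_000, 12),
    ("u2", 45, 61_000, 3),
    ("u3", 29, 38_000, 25),
    ("u4", 52, 75_000, 7),
]


def horizontal_split(rows: List[Row], n_parties: int) -> List[List[Row]]:
    """HFL: every party keeps all columns, but only its own users (rows)."""
    return [rows[i::n_parties] for i in range(n_parties)]


def vertical_split(rows: List[Row], columns: List[str],
                   feature_groups: List[List[str]]) -> List[List[Row]]:
    """VFL: every party keeps the same users, but only its own columns.
    Each party also keeps user_id so records can be aligned later."""
    id_col = columns.index("user_id")
    parts = []
    for group in feature_groups:
        idx = [id_col] + [columns.index(c) for c in group]
        parts.append([tuple(row[i] for i in idx) for row in rows])
    return parts


hfl = horizontal_split(rows, 2)
vfl = vertical_split(rows, columns, [["age", "income"], ["purchases"]])
print(hfl[0])  # [('u1', 34, 52000, 12), ('u3', 29, 38000, 25)]
print(vfl[1])  # [('u1', 12), ('u2', 3), ('u3', 25), ('u4', 7)]
```

In the vertical case, the first party plays the role of the bank (demographic and financial features) and the second the retailer (purchase counts) for the same four customers.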

In simplified form, the process looks like this: clients train locally → encrypted updates → central server aggregates → new global model.

Why is federated learning considered privacy-preserving?

Federated learning is built on a simple idea: keep the data where it is.

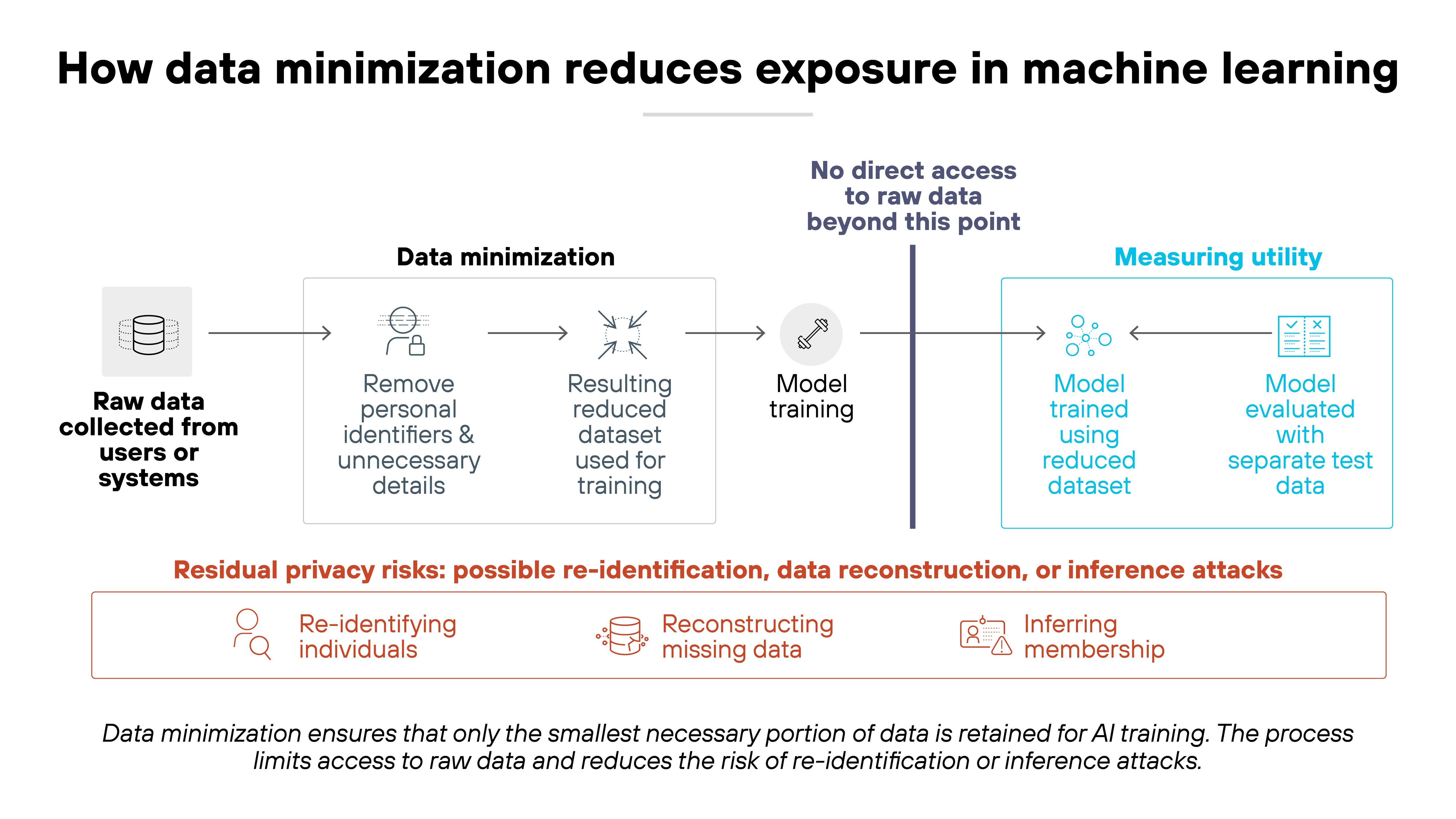

It applies the principle of data minimization, which means raw data never leaves the devices or servers that hold it. Only model updates or learned parameters are shared.

Here's why that matters.

In traditional machine learning, data is pooled in one place for training. That centralization increases exposure risk.

Federated learning removes the central data store as a single point of failure. Each participant trains locally and contributes model improvements, not raw data.

Now, privacy in this system comes from multiple protective layers.

-

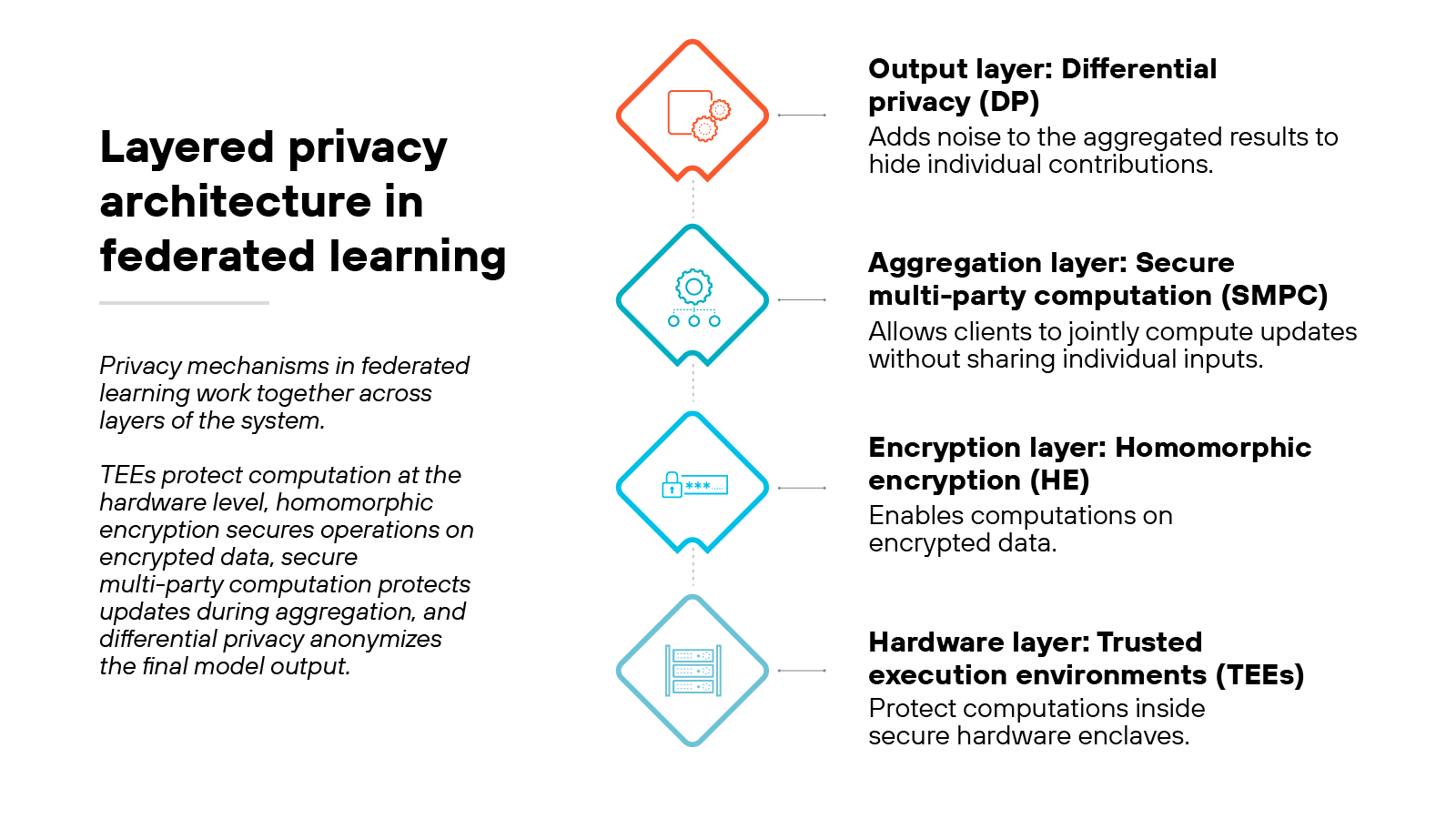

The first is secure multi-party computation (SMPC).

It allows several parties to jointly compute results—like combining model updates—without revealing their individual inputs. No participant can view another's data.

-

The second is homomorphic encryption (HE).

It enables computations on encrypted data. In other words, the system can process updates while they remain unreadable to anyone, even the central server.

-

The third is differential privacy (DP). It introduces small amounts of statistical noise to model updates. That makes it mathematically difficult to trace any update back to a specific user or record.

-

And finally, trusted execution environments (TEEs) provide hardware-level protection.

They isolate sensitive computations within secure enclaves that prevent tampering or unauthorized access.

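Together, these methods form a layered defense. Each technique covers gaps the others leave open. For example, SMPC and HE hide individual updates from the server but do not stop the final model from revealing details of its training data, while DP limits that leakage and TEEs protect the computation itself.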
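To make the layering concrete, here is a toy sketch that combines two of these ideas. Each client clips its update to a maximum norm and adds Gaussian noise (the basic recipe behind differentially private training), then hides the result with pairwise random masks that cancel when the server sums all updates (the idea behind mask-based secure aggregation). It is illustrative only, and the helper names are hypothetical: the noise scale is not calibrated to a formal privacy budget, the shared masks come from one seeded random generator rather than a key agreement between clients, and client dropouts are not handled.

```python
import math
import random
from typing import Dict, List, Tuple

Vector = List[float]
Masks = Dict[Tuple[int, int], Vector]


def clip(update: Vector, max_norm: float) -> Vector:
    """Scale the update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [v * scale for v in update]


def add_gaussian_noise(update: Vector, sigma: float, rng: random.Random) -> Vector:
    """DP-style step: add independent Gaussian noise to every coordinate."""
    return [v + rng.gauss(0.0, sigma) for v in update]


def pairwise_masks(n_clients: int, dim: int, rng: random.Random) -> Masks:
    """One shared random mask per client pair (i < j). A real protocol would
    derive each mask from a key agreement; a single RNG stands in for that."""
    return {
        (i, j): [rng.uniform(-100, 100) for _ in range(dim)]
        for i in range(n_clients)
        for j in range(i + 1, n_clients)
    }


def mask_update(cid: int, update: Vector, masks: Masks, n_clients: int) -> Vector:
    """Client cid adds masks shared with higher-numbered peers and subtracts
    masks shared with lower-numbered peers, so every mask cancels in the sum."""
    masked = list(update)
    for other in range(n_clients):
        if other == cid:
            continue
        key = (min(cid, other), max(cid, other))
        sign = 1.0 if cid < other else -1.0
        masked = [m + sign * r for m, r in zip(masked, masks[key])]
    return masked


rng = random.Random(0)
raw_updates = [[0.5, -1.2, 0.3], [2.0, 0.1, -0.4], [-0.3, 0.8, 0.9]]
n, dim = len(raw_updates), len(raw_updates[0])

# Layer 1 (DP-style): clip and add noise on each client.
protected = [add_gaussian_noise(clip(u, max_norm=1.0), sigma=0.01, rng=rng) for u in raw_updates]
# Layer 2 (secure-aggregation-style): mask before sending.
masks = pairwise_masks(n, dim, rng)
sent = [mask_update(i, u, masks, n) for i, u in enumerate(protected)]

# The server only sees `sent`. Each entry looks random, but the sum matches.
server_sum = [sum(col) for col in zip(*sent)]
true_sum = [sum(col) for col in zip(*protected)]
print([abs(a - b) < 1e-9 for a, b in zip(server_sum, true_sum)])  # [True, True, True]
```

The masks protect individual updates from the server, while the clipping and noise limit what the final sum can reveal about any one client, which is the kind of gap-covering the layered approach relies on.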

What are the main challenges of federated learning?

Federated learning has clear privacy benefits, but it also introduces new technical and operational challenges. These challenges come from how distributed the system is and how much it depends on consistent communication, performance, and trust.

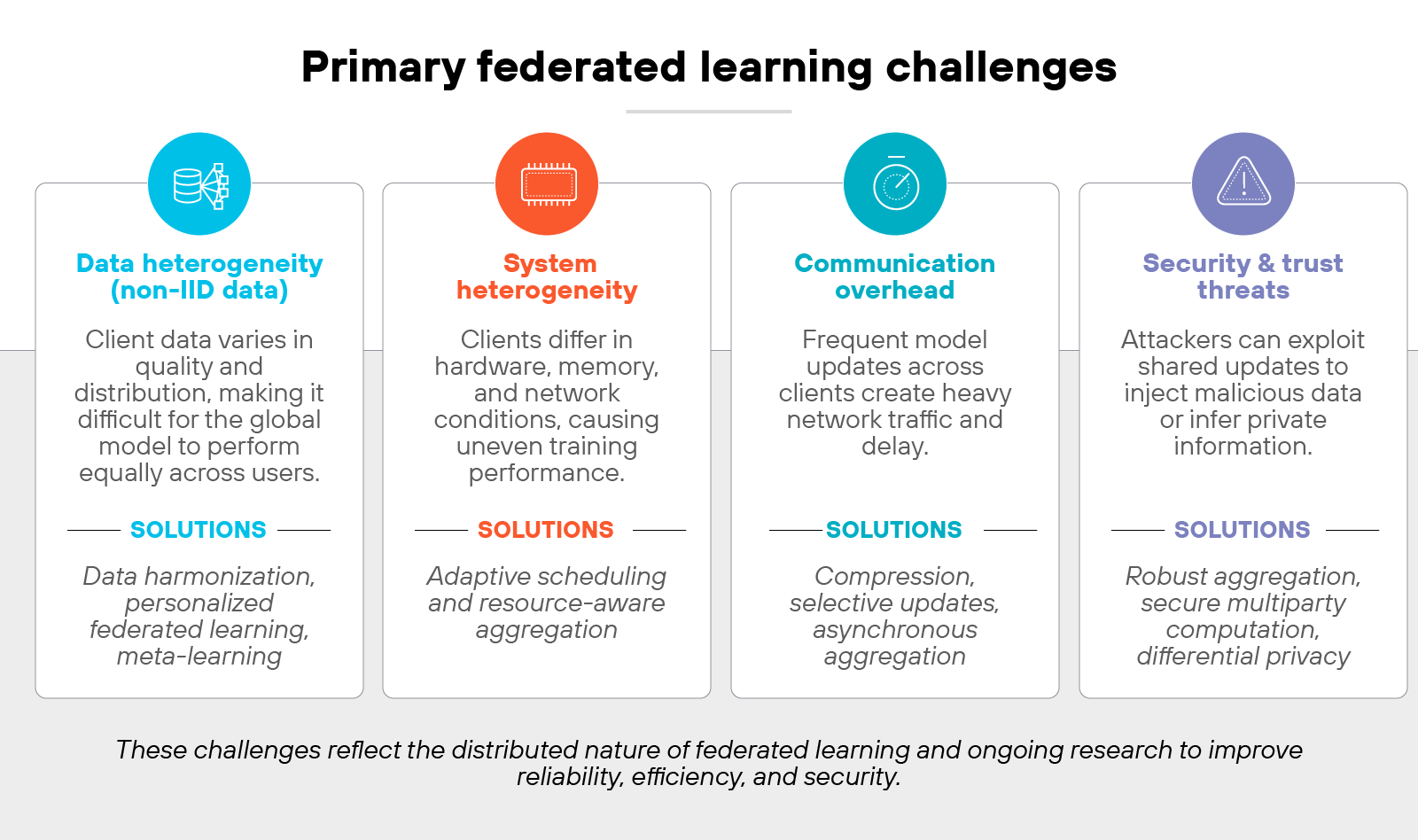

Data heterogeneity (non-IID data)

Each participant's data looks different. Some have large, clean datasets. Others have small, inconsistent, or skewed ones. When clients' data come from different distributions rather than one shared distribution, the data is called non-independent and identically distributed (non-IID), and that makes it harder for a single global model to perform equally well across all users.

Here's why.

When data distributions differ, each client's local training pulls the model toward what works best for its own data, so local updates may conflict. That slows convergence and can reduce overall accuracy.
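A small numeric example shows how conflicting updates can pull the global model off course, an effect often called client drift. It assumes two hypothetical, equal-sized clients whose local losses are (w - 1)^2 and 4(w + 1)^2, so the optimum of their combined loss is w = -0.6. With one local step per round, averaging behaves like gradient descent on the combined loss. With many local steps, each client runs to its own optimum (1 and -1), and the average settles near 0 instead.

```python
from typing import Callable

Grad = Callable[[float], float]


def grad_a(w: float) -> float:
    return 2 * (w - 1)  # derivative of (w - 1)^2, local optimum at w = 1


def grad_b(w: float) -> float:
    return 8 * (w + 1)  # derivative of 4(w + 1)^2, local optimum at w = -1


def local_sgd(w: float, grad: Grad, lr: float, steps: int) -> float:
    for _ in range(steps):
        w -= lr * grad(w)
    return w


def federated_rounds(local_steps: int, rounds: int = 200, lr: float = 0.05) -> float:
    w = 0.0
    for _ in range(rounds):
        # Equal-sized clients, so the FedAvg weighting reduces to a plain mean.
        w = (local_sgd(w, grad_a, lr, local_steps) + local_sgd(w, grad_b, lr, local_steps)) / 2
    return w


print(round(federated_rounds(local_steps=1), 3))    # -0.6, the combined optimum
print(round(federated_rounds(local_steps=100), 3))  # about 0, far from -0.6
```

More local steps save communication but let clients wander further from the shared objective, which is part of why non-IID data is hard.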

Researchers are exploring data harmonization, personalized federated learning, and meta-learning to make models adapt better to each client's unique data.

System heterogeneity

Devices in a federated network don't all look the same. Some are high-end servers. Others are low-power edge devices or mobile phones, which means training speed, memory, and network conditions vary widely.

This variation leads to inconsistent participation and dropped connections during training. Adaptive scheduling and resource-aware aggregation techniques now help balance workload and keep models learning efficiently across different environments.
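One simple form of adaptive scheduling is to give every selected device the same wall-clock deadline and let each run only as many local steps as it can finish in time, skipping devices that cannot complete a minimum amount of work. The sketch below assumes hypothetical per-device throughput estimates and a made-up policy (`plan_round`, `min_steps`, `max_steps`); real cross-device deployments also weigh factors such as whether a device is idle, charging, and on an unmetered network.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Device:
    name: str
    steps_per_second: float  # hypothetical throughput estimate from past rounds


def plan_round(devices: List[Device], deadline_s: float,
               min_steps: int, max_steps: int) -> Dict[str, int]:
    """Assign each device a local step budget that fits the round deadline.

    Devices that cannot finish min_steps before the deadline sit this round
    out instead of stalling everyone else (the straggler problem).
    """
    plan = {}
    for d in devices:
        affordable = int(d.steps_per_second * deadline_s)
        if affordable >= min_steps:
            plan[d.name] = min(affordable, max_steps)
    return plan


devices = [
    Device("datacenter-gpu", 200.0),
    Device("laptop", 12.0),
    Device("phone", 1.5),
    Device("sensor", 0.05),
]
print(plan_round(devices, deadline_s=30.0, min_steps=10, max_steps=500))
# {'datacenter-gpu': 500, 'laptop': 360, 'phone': 45}
```

Averaging models that ran different numbers of local steps can skew the global model toward the faster devices, so a schedule like this may need to be paired with an aggregation rule that accounts for the unequal work.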

Communication overhead

Federated learning depends on repeated message exchanges between clients and the server. Each training round involves sending and receiving model updates. At scale, that's a lot of network traffic.

Compression, selective update sharing, and asynchronous aggregation methods are reducing the bandwidth burden. But communication efficiency remains a central focus of ongoing research.
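To see why bandwidth matters, consider that a model with 10 million 32-bit parameters produces an update of about 40 MB, sent by every participating client in every round. Top-k sparsification is one common compression technique: each client sends only the k largest-magnitude entries of its update along with their indices. The sketch below is a minimal version with hypothetical helper names; practical systems often add error feedback (carrying the dropped remainder into the next round) and quantization, neither of which is shown.

```python
import heapq
from typing import Dict, List


def top_k_sparsify(update: List[float], k: int) -> Dict[int, float]:
    """Client side: keep only the k entries with the largest absolute value."""
    top = heapq.nlargest(k, range(len(update)), key=lambda i: abs(update[i]))
    return {i: update[i] for i in sorted(top)}


def densify(sparse: Dict[int, float], dim: int) -> List[float]:
    """Server side: rebuild a full-length vector, treating missing entries as 0."""
    dense = [0.0] * dim
    for i, v in sparse.items():
        dense[i] = v
    return dense


update = [0.01, -0.8, 0.05, 0.6, -0.02, 0.0, 0.3, -0.04]
sparse = top_k_sparsify(update, k=3)
print(sparse)  # {1: -0.8, 3: 0.6, 6: 0.3}
print(densify(sparse, len(update)))
```

Each kept entry costs an index plus a value, so if both are 32-bit, traffic shrinks by roughly dim / (2k) when k is much smaller than the model size.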

Security and trust threats

Even though raw data stays local, federated systems can still be attacked through shared model updates. Malicious clients can train on poisoned data or submit manipulated gradients, and a curious server or participant can analyze updates to infer private information.

Robust aggregation, secure multiparty computation, and differential privacy help defend against these risks. However, Byzantine clients (participants that send arbitrary or adversarial updates) and model inversion attacks continue to challenge how securely federated systems can scale.
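Coordinate-wise median is one of the simplest robust aggregation rules: instead of averaging each parameter across clients, the server takes the median, so a minority of extreme values cannot drag the result arbitrarily far. The toy sketch below compares it with a plain mean when one hypothetical client submits a poisoned update. It is not a complete defense, and other robust rules, such as trimmed means or Krum, exist.

```python
import statistics
from typing import List

Vector = List[float]


def mean_aggregate(updates: List[Vector]) -> Vector:
    """Plain averaging, per coordinate."""
    return [statistics.fmean(col) for col in zip(*updates)]


def median_aggregate(updates: List[Vector]) -> Vector:
    """Coordinate-wise median: tolerates a minority of arbitrary updates."""
    return [statistics.median(col) for col in zip(*updates)]


honest = [[0.9, -0.5], [1.1, -0.4], [1.0, -0.6], [0.95, -0.55]]
poisoned = [[1000.0, 1000.0]]  # one malicious client sends an extreme update
updates = honest + poisoned

print(mean_aggregate(updates))    # pulled far off by the single attacker
print(median_aggregate(updates))  # [1.0, -0.5]
```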

These security implications—and how they shape AI risk management more broadly—are explored later in this article.

Where is federated learning used today?

Federated learning is no longer experimental. It's now part of how organizations handle sensitive data while still training effective AI models. The technology supports sectors where privacy, regulation, and distributed data make traditional centralized training impractical.

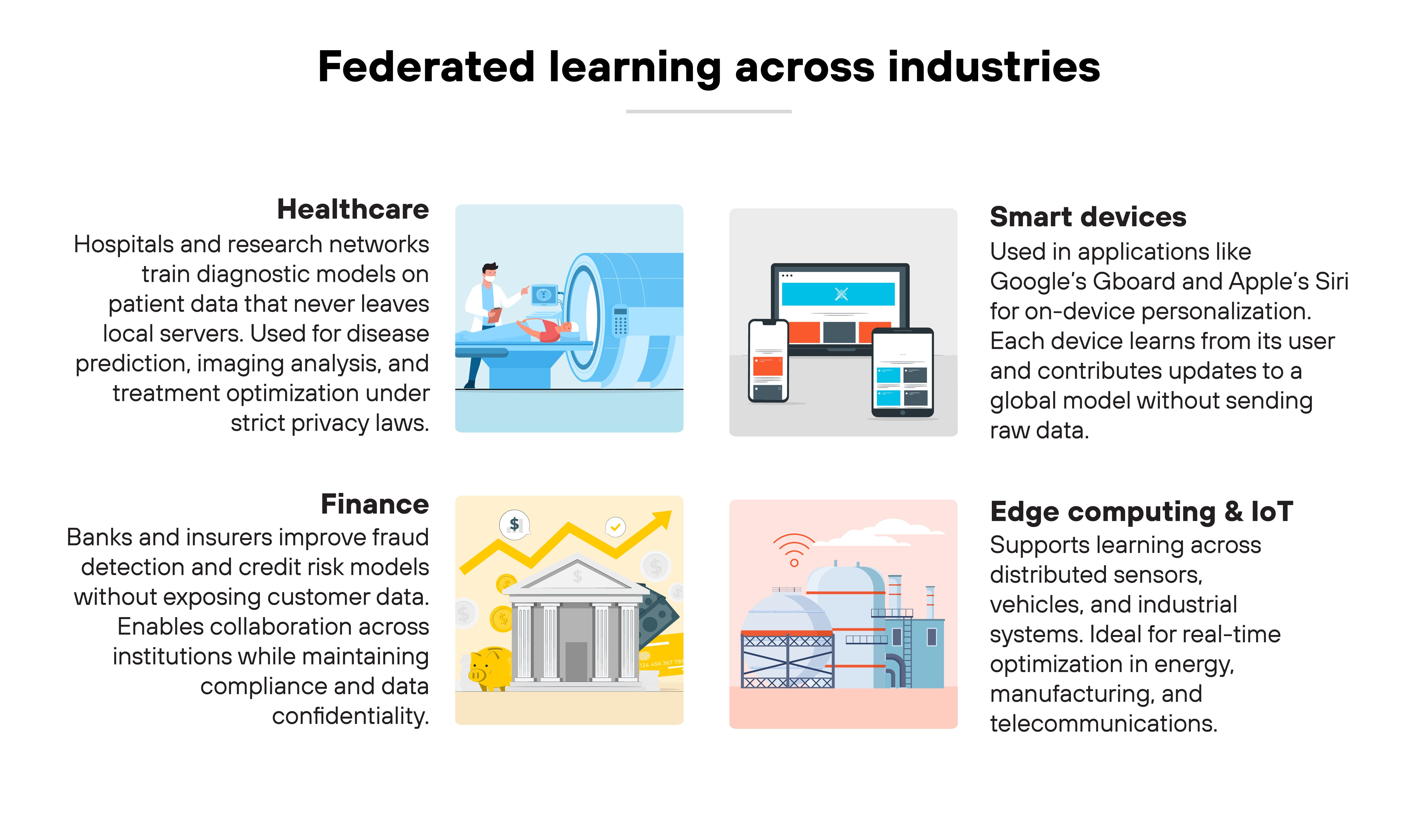

Healthcare

Hospitals and research networks use federated learning to train diagnostic models on patient data that never leaves their servers. That supports predicting diseases, improving imaging analysis, or identifying treatment patterns without sharing confidential records.

For example, institutions can collaborate on cancer detection or medical imaging projects while maintaining compliance with privacy laws.

Finance

Banks and insurers use federated learning to improve fraud detection and credit risk models. It allows them to learn from transaction data held in different institutions without exposing customer information. This approach reduces regulatory risk while improving model accuracy across diverse datasets.

Smart devices

On-device applications like Google's Gboard and Apple's Siri use federated learning for personalization. Each device learns from its user—improving text prediction or voice recognition—while updates contribute to a shared model.

The data itself never leaves the device.

Edge computing and IoT

Federated learning extends to industrial systems, connected vehicles, and IoT networks. It enables models to learn from distributed sensors and devices where bandwidth is limited and data privacy is critical. That makes it suitable for real-time optimization in sectors like energy, manufacturing, and telecommunications.

Ongoing deployments by companies such as Google, Apple, and Meta—and partnerships with academic consortia—continue to expand how federated learning is applied across industries.

How is federated learning evolving?

Federated learning is entering a more mature phase.

The early goal was simple: keep data local.

Now the focus is expanding. Researchers are building systems that are not only private but also reliable, transparent, and auditable.

Here's where it's heading.

The field is moving toward what's known as trustworthy federated learning.

It combines privacy, robustness, and security into one unified framework. The idea is that a model should protect sensitive data, detect manipulation, and verify the integrity of updates before they're merged into the global model. In other words, it's about establishing measurable trust in how learning happens.

At the same time, federated learning is being connected to large-scale AI.

Researchers are exploring how to train or fine-tune foundation models—including vision and large language models—on distributed datasets. To support that scale, confidential computing is emerging as a key enabler. It provides isolated, verifiable environments for handling sensitive model updates.

The supporting infrastructure is evolving too.

Trusted execution environments (TEEs) now allow training steps to occur inside secure hardware enclaves that can be independently verified. When paired with ledger-based verification, these systems can record which computations occurred, under what privacy guarantees, and by whom. That gives organizations the ability to audit and prove compliance.
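Ledger-based verification can take many forms. As a rough illustration of the underlying idea only, the sketch below keeps a tamper-evident, hash-chained log of training rounds: each entry stores the hash of the previous entry, so editing any past record breaks the link that follows it. The record fields (round number, aggregator ID, privacy setting, model digest) are hypothetical, and this is neither a distributed ledger nor a TEE attestation protocol.

```python
import hashlib
import json
from typing import Dict, List


def entry_hash(entry: Dict) -> str:
    """Hash a log entry deterministically (sorted keys, compact JSON)."""
    payload = json.dumps(entry, sort_keys=True, separators=(",", ":")).encode()
    return hashlib.sha256(payload).hexdigest()


def append_round(log: List[Dict], record: Dict) -> None:
    """Append a record that commits to the hash of the previous entry."""
    prev = entry_hash(log[-1]) if log else "0" * 64
    log.append({"prev_hash": prev, **record})


def verify_chain(log: List[Dict]) -> bool:
    """True only if every entry still points at the hash of its predecessor."""
    return all(log[i]["prev_hash"] == entry_hash(log[i - 1]) for i in range(1, len(log)))


log: List[Dict] = []
append_round(log, {"round": 1, "aggregator": "agg-01", "dp_epsilon": 2.0,
                   "model_sha256": hashlib.sha256(b"global-model-round-1").hexdigest()})
append_round(log, {"round": 2, "aggregator": "agg-01", "dp_epsilon": 2.0,
                   "model_sha256": hashlib.sha256(b"global-model-round-2").hexdigest()})
print(verify_chain(log))  # True

log[0]["dp_epsilon"] = 50.0  # someone rewrites the recorded privacy setting
print(verify_chain(log))  # False
```

The newest entry is only protected once its hash is published or anchored somewhere the log's owner cannot rewrite, which is the role a shared ledger plays.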

Finally, federated learning is branching into new areas such as federated analytics, which applies the same privacy-preserving approach to data analysis, and personalized federated learning, which adapts models to individual participants.
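Federated analytics often comes down to computing aggregate statistics from local summaries. As a minimal sketch with hypothetical data and helper names, each client below reports only a count and a sum, and the server combines them into a global mean without seeing individual values. In practice, these summaries would also pass through secure aggregation or differential privacy.

```python
from typing import List, Tuple


def local_summary(values: List[float]) -> Tuple[int, float]:
    """Runs on the client: only (count, sum) leaves the device."""
    return len(values), sum(values)


def global_mean(summaries: List[Tuple[int, float]]) -> float:
    """Runs on the server: combine local summaries into the population mean."""
    total_count = sum(c for c, _ in summaries)
    total_sum = sum(s for _, s in summaries)
    return total_sum / total_count


# Hypothetical per-device values, e.g. daily app session lengths in minutes.
devices = [[12.0, 30.0], [6.0], [20.0, 25.0, 9.0]]
print(global_mean([local_summary(v) for v in devices]))  # 17.0
```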

Open research questions remain, though. Scalability, fairness, and verifiability are still being refined as federated learning grows from a privacy technique into a broader framework for secure, distributed AI.

How does federated learning impact AI security?

Federated learning strengthens AI security by changing where and how data is used.

Instead of pooling information in a central repository, it keeps data distributed across many devices or organizations. That shift limits the number of access points attackers can target.

Here's why that matters.

By enforcing data locality, federated learning reduces the risk of large-scale data breaches.

Attackers can't reach an entire dataset by compromising a single server because the data never leaves its source. It also aligns with secure AI design principles like least privilege and defense in depth—each participant only processes what it needs to contribute to the global model.

Plus, federated learning can be combined with confidential computing, where sensitive workloads run inside secure hardware environments.

These systems protect training operations from tampering or interception, even by insiders. Together, these approaches make AI pipelines more resilient to unauthorized access and data exposure.

However:

Privacy doesn't eliminate risk. Federated learning does introduce new attack surfaces through shared model updates.

Malicious participants can inject poisoned data, manipulate gradients, or attempt inference attacks—techniques that reconstruct private information from model behavior. Defending against these threats requires strong cryptography, model validation, and anomaly monitoring.
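Why can shared updates leak data at all? For some models the leak is exact. In the hypothetical example below, a client computes the gradient of a linear model with a bias term on a single private record and shares it. Because each weight gradient equals the bias gradient times the corresponding input value, anyone who sees both can divide them and recover the record. Attacks on deep networks are more involved, and batching, aggregation, and added noise make them harder, but this is the basic mechanism.

```python
from typing import List, Tuple


def single_example_gradient(w: List[float], b: float, x: List[float],
                            target: float) -> Tuple[List[float], float]:
    """Gradient of 0.5 * (w.x + b - target)^2 for ONE private example."""
    pred = sum(wi * xi for wi, xi in zip(w, x)) + b
    err = pred - target               # dL/db
    grad_w = [err * xi for xi in x]   # dL/dw_i = err * x_i
    return grad_w, err


def reconstruct_input(grad_w: List[float], grad_b: float) -> List[float]:
    """Attacker side: x_i = (dL/dw_i) / (dL/db), valid whenever dL/db != 0."""
    return [g / grad_b for g in grad_w]


private_x = [72.0, 1.0, 130.0]  # hypothetical record, e.g. heart rate, a flag, blood pressure
grad_w, grad_b = single_example_gradient(w=[0.01, -0.2, 0.005], b=0.1, x=private_x, target=1.0)
print(reconstruct_input(grad_w, grad_b))  # recovers private_x, up to float rounding
```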

It also demands trust in the aggregation process itself. Servers that combine updates must be verifiable and governed by strict policy controls to prevent manipulation.

In short: federated learning enhances AI security by decentralizing risk. But it also creates new responsibilities for organizations to secure distributed systems end to end. It's both an advancement and a challenge. And it's central to how privacy-preserving AI ecosystems are being built today.
