- 1. Why are AI-BOMs necessary?

- 2. What information does an AI-BOM contain?

- 3. How do you build an AI-BOM?

- 4. Where does an AI-BOM fit in the AI lifecycle?

- 5. Who maintains an AI-BOM inside an organization?

- 6. What challenges come with implementing AI-BOMs?

- 7. What standards define AI-BOMs today?

- 8. What is the difference between an SBOM and an AI-BOM?

- 9. AI-BOM FAQs

- Why are AI-BOMs necessary?

- What information does an AI-BOM contain?

- How do you build an AI-BOM?

- Where does an AI-BOM fit in the AI lifecycle?

- Who maintains an AI-BOM inside an organization?

- What challenges come with implementing AI-BOMs?

- What standards define AI-BOMs today?

- What is the difference between an SBOM and an AI-BOM?

- AI-BOM FAQs

What Is an AI-BOM (AI Bill of Materials)? & How to Build It

- Why are AI-BOMs necessary?

- What information does an AI-BOM contain?

- How do you build an AI-BOM?

- Where does an AI-BOM fit in the AI lifecycle?

- Who maintains an AI-BOM inside an organization?

- What challenges come with implementing AI-BOMs?

- What standards define AI-BOMs today?

- What is the difference between an SBOM and an AI-BOM?

- AI-BOM FAQs

An AI-BOM is a machine-readable inventory that lists every dataset, model, and software component used to build and operate an AI system. It's created by collecting this information during model development and structuring it with standards to record versions, sources, and relationships.

Building an AI-BOM enables organizations to trace model lineage, verify data provenance, and meet transparency and compliance requirements.

Why are AI-BOMs necessary?

AI systems are becoming more complex, distributed, and dependent on external data and models.

Unlike traditional software, their behavior depends not just on code but on how they're trained, tuned, and updated over time. Each model may rely on third-party data sources, pre-trained components, or continuous learning pipelines that change automatically.

At the same time, organizations are deploying AI faster than they can govern it.

- 88% of organizations report regular AI use in at least one business function (up from 78% the previous year).

- Half of respondents say their organizations are using AI in three or more business functions.

- Nearly 50% of large companies (>$5B in revenue) have scaled AI, compared with just 29% of small companies (<$100M).

That lack of traceability makes it difficult to verify security, fairness, and compliance at scale.

That creates a new kind of supply chain. One made up of datasets, models, and algorithms rather than static code.

Traditional software bills of materials (SBOMs) were never designed for that level of uncertainty. They track software dependencies but not the datasets, model weights, or retraining processes that shape AI behavior over time.

That's where the gap appeared.

As AI systems scaled and adopted third-party components, new risks became apparent, like data poisoning, model tampering, and unverified third-party components.

Each introduces uncertainty into how an AI system learns and behaves. And without a transparent record of those elements, it's nearly impossible to trace or validate what's running in production.

Regulators have noticed.

Recent policy efforts now require documentation of model sources, data provenance, and performance testing. Security authorities have published AI‑specific SBOM use cases to address these blind spots.

Basically, AI-BOMs are necessary today because AI isn't static software. It's a living system that evolves, retrains, and depends on data you don't always control.

A structured, machine‑readable bill of materials is a reliable way to keep that ecosystem accountable.

- Top GenAI Security Challenges: Risks, Issues, & Solutions

- What Is Data Poisoning? [Examples & Prevention]

FREE AI RISK ASSESSMENT

Get a complimentary vulnerability assessment of your AI ecosystem.

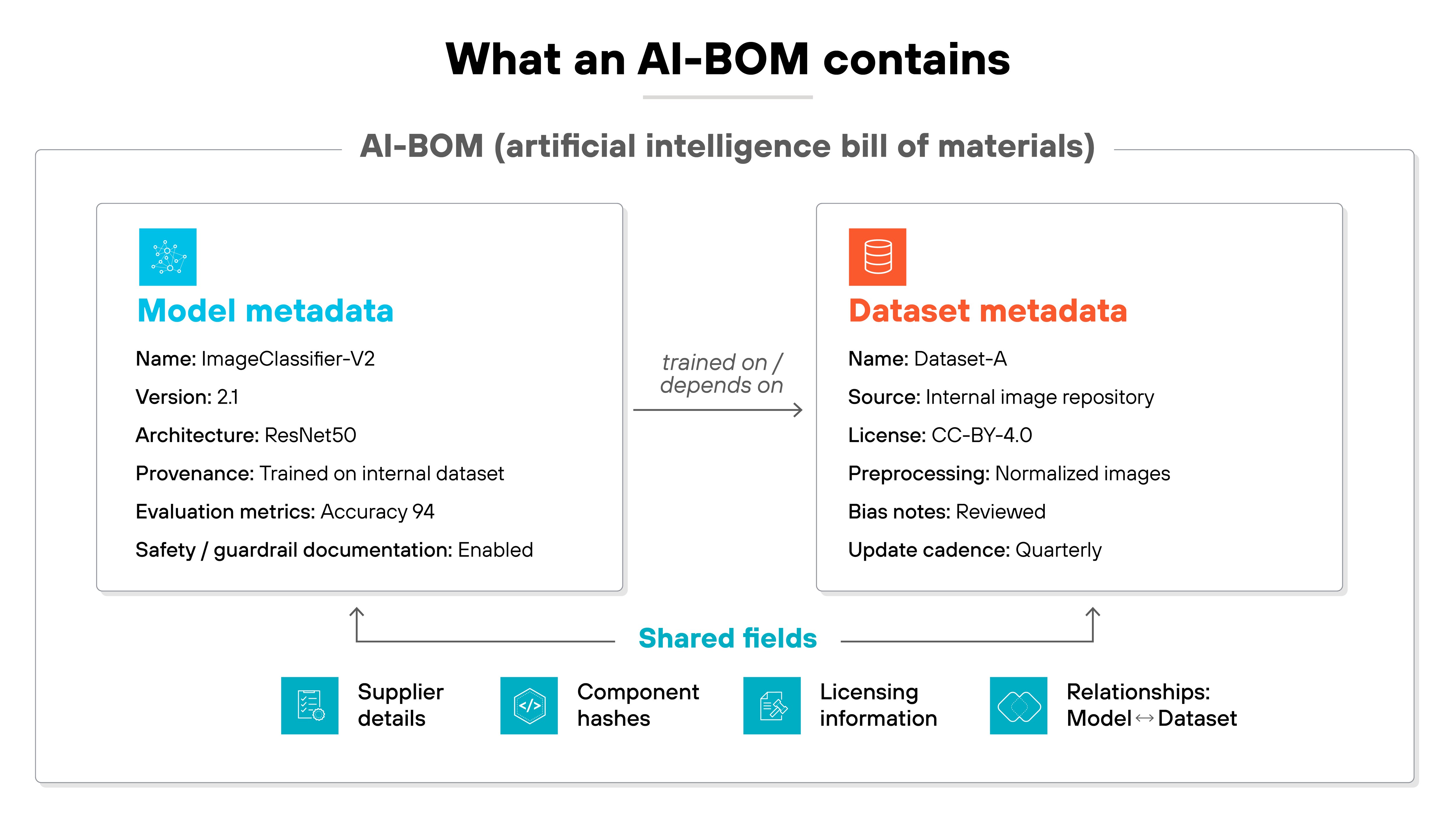

Claim assessmentWhat information does an AI-BOM contain?

An AI-BOM is built around structured, machine-readable fields.

Each field describes a specific part of the AI system, from the model itself to the data that trained it and the relationships that tie them together. These fields follow a defined schema so they can be automated, validated, and compared across systems.

Model metadata

Model metadata describes the AI component itself. It includes the model's name, version, architecture, and provenance details. These fields document how and when a model was trained, along with the tools or configurations that shaped it.

Performance metrics such as accuracy or precision are also included. They help users understand what the model was evaluated against and how it performed.

Ethical or safety notes can be added to flag known limitations or constraints. For generative AI (GenAI) models, this includes documentation of model safety layers, guardrails, and any specific hallucination mitigation strategies deployed.

- What Are AI Hallucinations? [+ Protection Tips]

- What Is AI Bias? Causes, Types, & Real-World Impacts

Dataset metadata

Dataset metadata focuses on the data used to train or fine-tune the model. It lists the dataset's source, licensing, and preprocessing steps that shaped the data before training.

These fields provide context on data quality and representativeness. They also record bias annotations or known limitations so organizations can monitor fairness. For models trained on vast or proprietary data, documentation must also address data privacy, memorization risks, and content filtering steps used prior to training.

The update cadence field indicates how often datasets change. Which is a critical factor for retraining and drift detection.

Shared fields

Shared fields link everything together. They include supplier details, component hashes for integrity, and licensing information. Relationship fields define how each model and dataset connect, forming the backbone of traceability within the AI-BOM.

Example

{

"Model": {

"name": "ImageClassifier-V2",

"version": "2.1",

"architecture": "ResNet50",

"provenance": "Trained on internal dataset",

"dataset": "Dataset-A",

"evaluation_metrics": { "accuracy": "94%" }

},

"Dataset": {

"name": "Dataset-A",

"source": "Internal image repository",

"license": "CC-BY-4.0",

"update_cadence": "Quarterly"

}

}

How do you build an AI-BOM?

Building an AI-BOM is a structured, standards-driven process. It's methodical by design because consistency is what makes the record useful.

Each step helps capture the details that define how your models and datasets are built, verified, and maintained.

Think of it less as paperwork and more as infrastructure. The goal is to make transparency part of how your AI systems operate. Not an afterthought.

When done right, the AI-BOM becomes a living source of truth that updates as your models evolve.

Let's walk through what that process looks like in practice.

Step 1: Define scope and ownership

Start by deciding which AI systems to include.

Not every experiment or prototype needs full documentation, but production and externally sourced models do. Scope determines depth.

Then assign ownership.

For example: MLOps teams manage automation, data scientists provide model lineage, and compliance teams maintain oversight. Without clear ownership, the AI-BOM quickly falls out of sync with reality.

Step 2: Select SPDX 3.0 AI and Dataset profiles

SPDX 3.0.1 is the technical backbone for AI-BOMs. It defines how to describe models, datasets, and their dependencies in a consistent, machine-readable way.

The AI and Dataset Profiles capture what matters most—architecture, data sources, licensing, and provenance—and standardize how relationships like trained on or tested on are represented.

Using these profiles means every AI-BOM can interoperate across tools and teams.

Step 3: Automate extraction in ML pipelines

Manual updates don't scale. Embed metadata collection into your training and deployment pipelines instead.

Automation captures model parameters, dataset sources, and environment details as they change. Which means every new model version or retraining cycle automatically updates the AI-BOM without human intervention or stale records.

Step 4: Validate via SPDX schemas and ontology

Once data is collected, it needs to be checked.

SPDX provides JSON-LD schemas and an ontology that define how an AI-BOM should be structured.

Run validations to confirm fields are complete and relationships make sense. Validation isn't busywork. It prevents mismatches that can break traceability or compromise audit readiness.

Step 5: Version and sign for integrity

Each AI-BOM should be versioned alongside the model it describes. That keeps history intact when models retrain or change ownership.

Add a cryptographic signature or hash to verify authenticity. This step enforces integrity and ensures version history remains tamper-proof.

Step 6: Integrate with compliance and monitoring workflows

An AI-BOM delivers value only when it connects to the systems that use it. Link it with vulnerability management, model monitoring, and compliance reporting tools.

That integration lets teams flag outdated models, track retraining events, and automatically prove adherence to regulatory and internal governance requirements.

Step 7: Store centrally for audit or incident response

Centralize storage in a version-controlled repository. It becomes the single source of truth for audits, security investigations, and cross-team collaboration.

When an incident occurs, teams can immediately trace which model or dataset was involved. And respond with confidence instead of guesswork.

- How to Secure AI Infrastructure: A Secure by Design Guide

- How to Build a Generative AI Security Policy

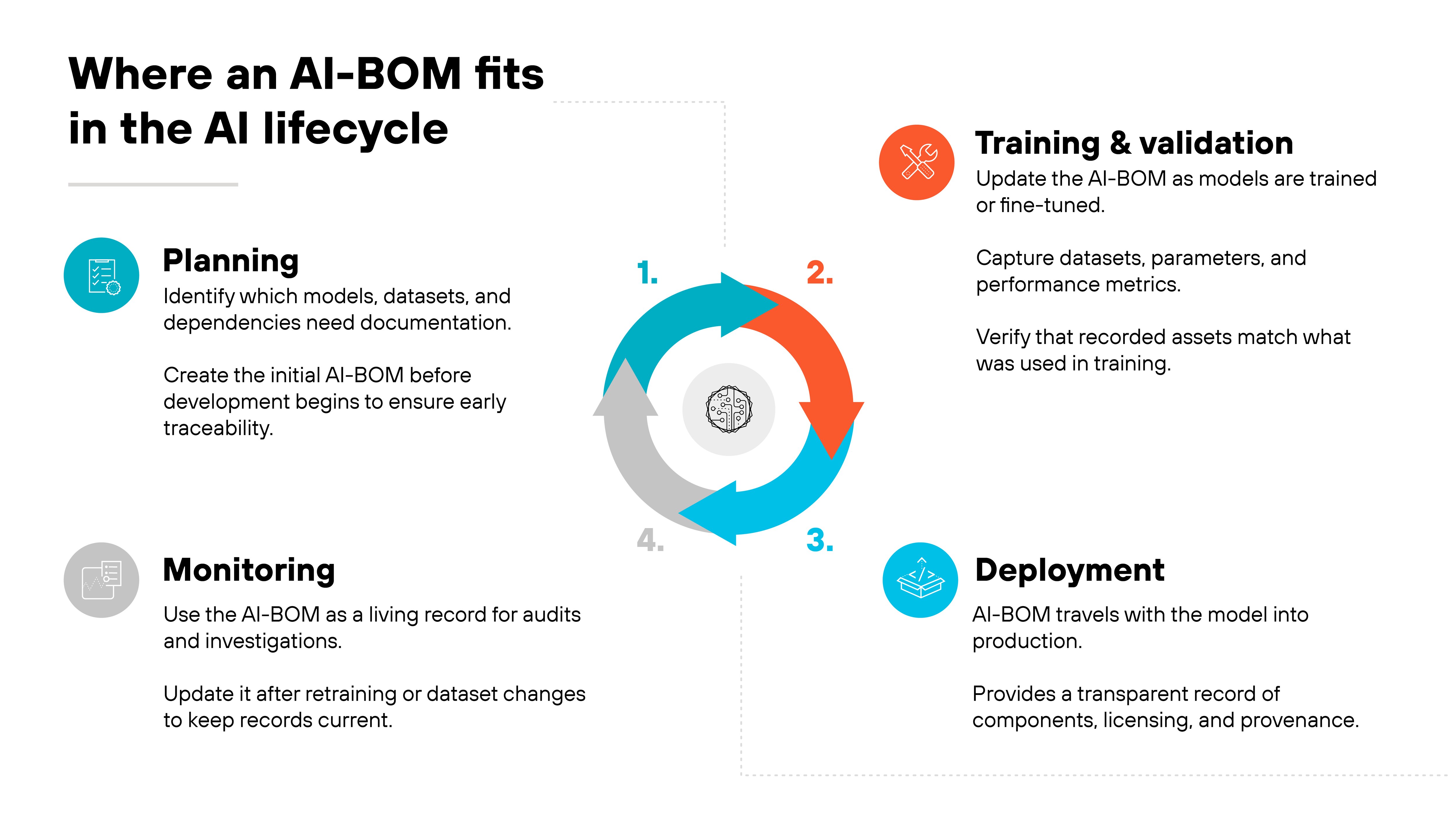

Where does an AI-BOM fit in the AI lifecycle?

An AI-BOM isn't created once and forgotten. It's woven through every phase of the AI lifecycle, from planning and training to deployment and monitoring.

-

It begins in the planning stage.

That's when teams identify which models, datasets, and dependencies need to be documented. Early generation ensures traceability before code or data pipelines even take shape.

-

Next comes training and validation.

Each time a model is trained or fine-tuned, the AI-BOM is updated to reflect new parameters, datasets, and performance results. Validation stages confirm that documented assets match what was actually used.

-

During deployment, the AI-BOM travels with the model.

It provides a transparent record of components, licensing, and provenance, which is essential for compliance and operational oversight.

-

Finally, in monitoring, the AI-BOM acts as a living record.

It's referenced during audits, updated after retraining, and used to trace back any issues that arise in production.

The AI-BOM runs parallel to the AI lifecycle. It evolves continuously, keeping documentation aligned with how models and data change over time.

Who maintains an AI-BOM inside an organization?

Maintaining an AI-BOM isn't a one-team job. It requires coordination across technical, security, and governance functions to keep the record accurate as systems evolve.

Responsibility is shared:

- Data science teams update details about models and datasets.

- MLOps teams manage the automation that generates and stores AI-BOM data.

- Product security teams verify integrity and ensure alignment with secure development practices.

- Compliance teams review updates for documentation and audit readiness.

So each group owns part of the process.

However, there should be one accountable owner.

In most organizations, that role sits with an AI security or ML governance lead. This person oversees lifecycle management, approves schema or field changes, and ensures updates follow established policy.

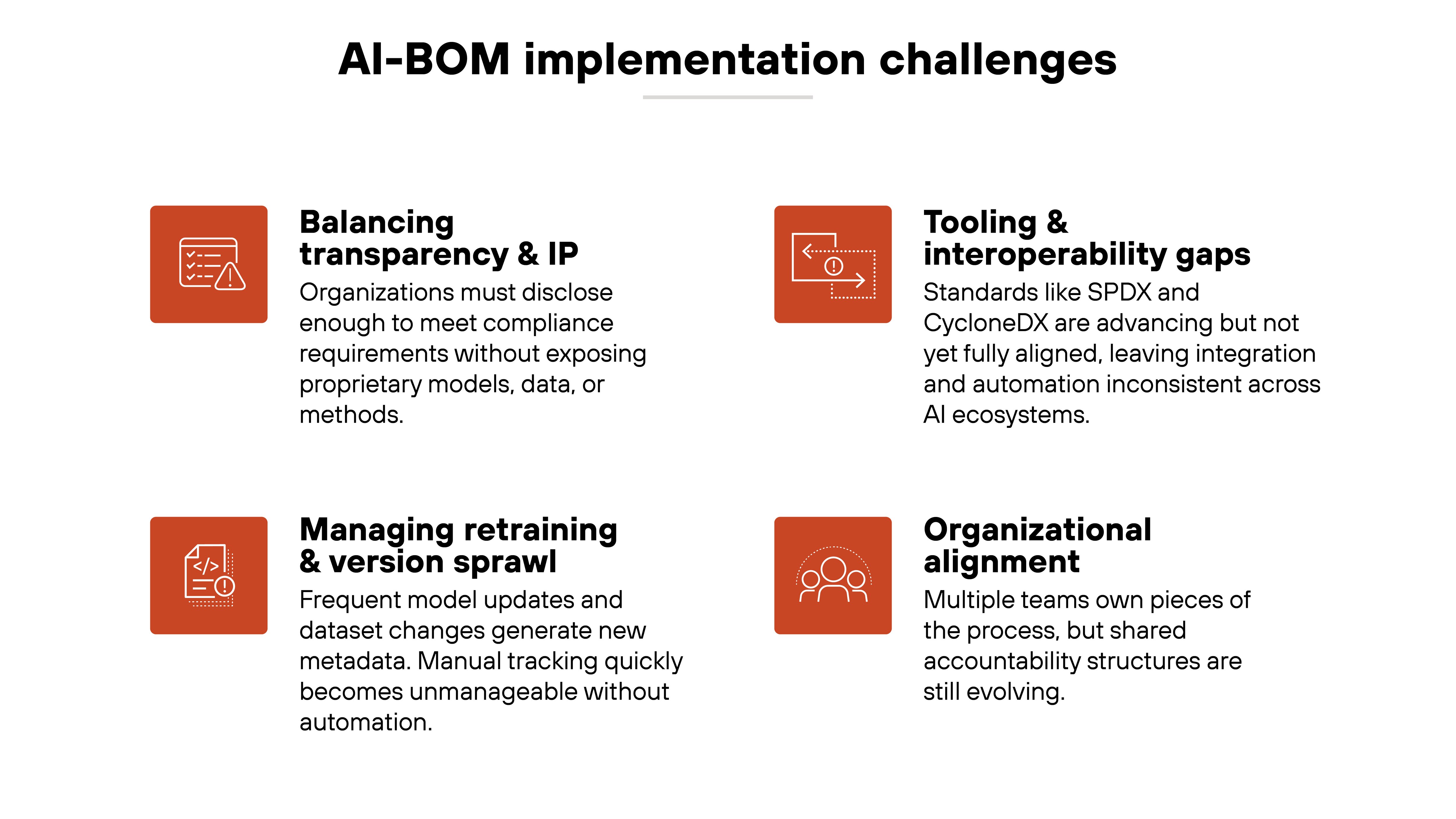

What challenges come with implementing AI-BOMs?

AI-BOM adoption is still developing, and real-world implementation brings its own set of challenges. The concept is proven. The tooling and operational maturity are still catching up.

Balancing transparency with intellectual property remains the most sensitive issue. Organizations want to disclose enough to prove compliance without revealing proprietary models or training methods. Finding that balance is still more art than automation.

Managing continuous retraining and version sprawl is another. Each model update or dataset change creates new metadata, and manual upkeep quickly becomes unmanageable without automation.

Tooling maturity and interoperability are also evolving. Standards like SPDX and CycloneDX are converging, but they're not yet seamlessly compatible across AI ecosystems.

Finally, organizational alignment can slow progress. Data science, compliance, and security teams all play a role in maintaining the AI-BOM. But shared accountability and ownership models are still emerging.

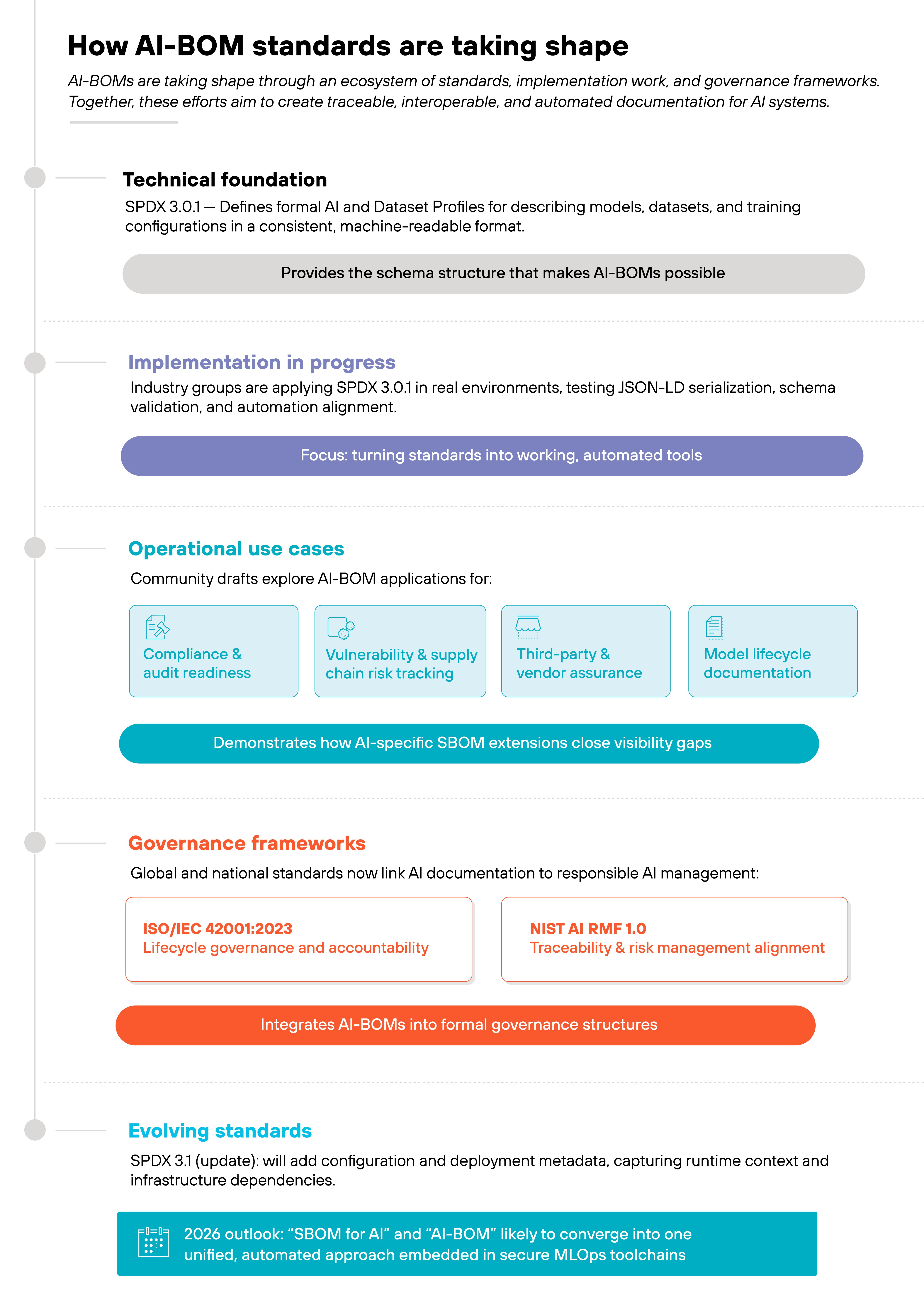

What standards define AI-BOMs today?

AI-BOMs are now being defined through a combination of technical standards, implementation work, and governance frameworks.

Each contributes something different, from schema design to risk alignment.

The foundation is the Software Package Data Exchange (SPDX) 3.0.1 specification, which introduced formal AI and Dataset profiles.

These profiles extend the traditional SBOM model to describe machine learning components—models, datasets, training configurations, and provenance data—in a consistent, machine-readable format.

SPDX provides the technical structure that makes an AI-BOM possible.

Beyond the standard itself, implementation work is already underway. Industry groups have published guidance on applying SPDX 3.0.1 in real AI environments.

This includes examples of JSON-LD serialization, schema validation, and best practices for aligning AI-BOM data with automation pipelines.

Operationally, community drafts are testing how AI-BOMs work in practice. Current use cases focus on compliance, vulnerability response, third-party risk management, and model lifecycle tracking.

They demonstrate how an AI-specific extension of an SBOM can close visibility gaps unique to machine learning systems.

Governance ties it all together. Recent international standards now reference AI asset documentation as part of responsible AI management.

Frameworks such as ISO/IEC 42001:2023 and the NIST AI Risk Management Framework 1.0 both emphasize traceability, accountability, and lifecycle control.

And finally, research validation. Peer-reviewed publications have documented the process of evolving SBOMs into AI-BOMs.

The goal is to ensure the standard is practical, interoperable, and ready for broad adoption.

Research validation continues to shape these standards, ensuring they remain practical and interoperable.

Work is underway in the SPDX community to extend coverage for configuration and deployment metadata. Future releases are expected to expand into runtime and infrastructure details.

Today, the terms SBOM for AI and AI-BOM are used alongside each other, but their usage is gradually aligning as guidance and standards mature. Over time, the focus is likely to shift from defining the schema to automating it within secure MLOps toolchains.

UNIT 42 AI SECURITY ASSESSMENT FOR GENAI PROTECTION

Learn how Unit 42 experts can help you create a threat-informed strategy for data, models, and applications

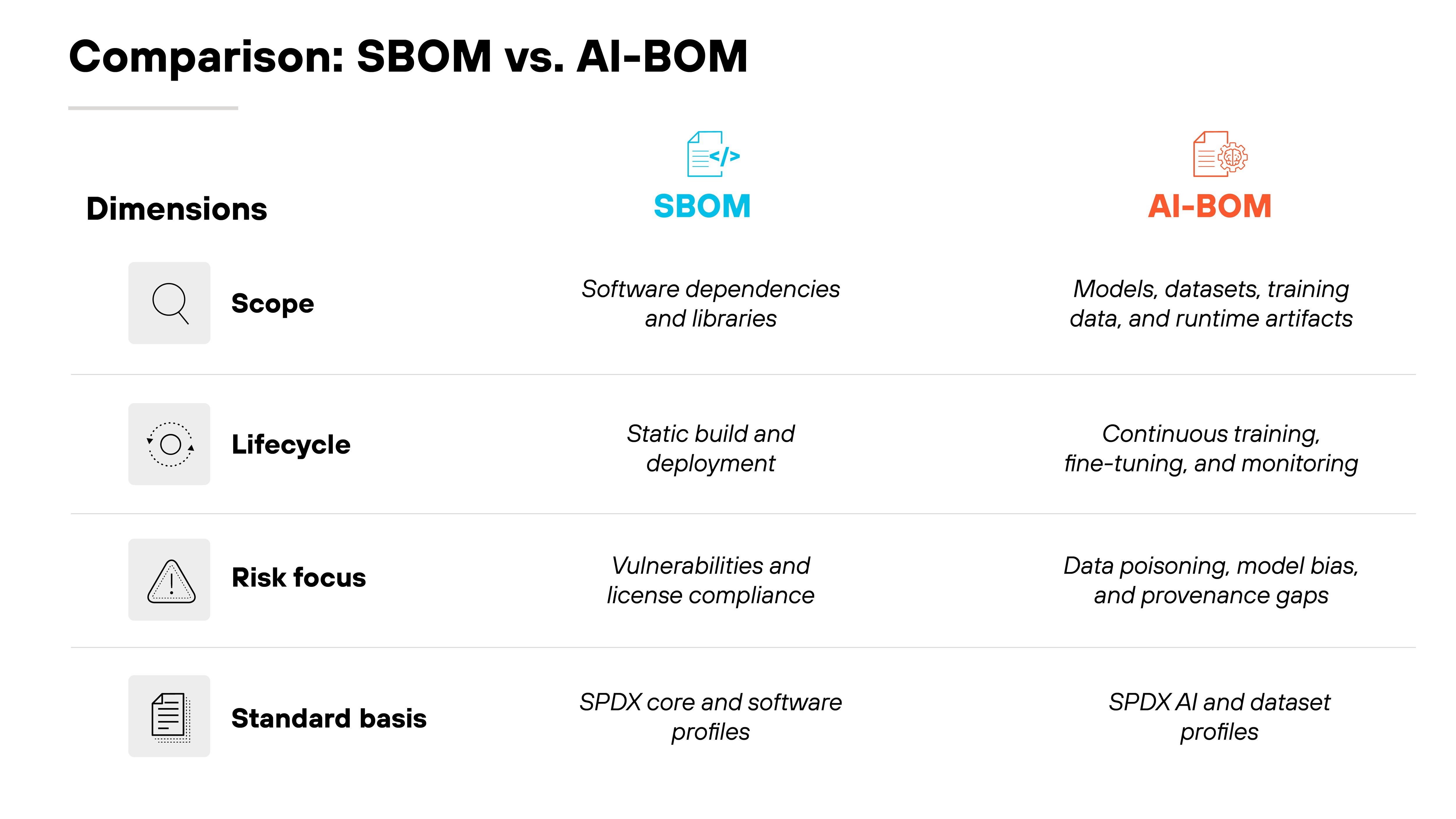

More infoWhat is the difference between an SBOM and an AI-BOM?

AI-BOMs build on the same foundation as SBOMs but were created to address the unique dependencies and lifecycle of modern AI systems.

Here's the difference between the two:

- Traditional SBOMs track static software components. They describe what goes into an application at a single point in time.

- AI-BOMs expand that idea to include everything that shapes how an AI system behaves: datasets, model architectures, training pipelines, and continuous retraining.

Both use the same underlying tooling. The distinction lies in scope and schema. SBOMs capture code. AI-BOMs capture the data, model, and dependency context that define how AI systems are built and maintained.