- 1. Why do generative AI hallucinations happen?

- 2. What types of generative AI hallucinations exist?

- 3. How common are hallucinations in LLMs?

- 4. Real-world examples of AI hallucinations

- 5. What are the security risks of AI hallucinations?

- 6. How to protect against generative AI hallucinations

- 7. What does the future of hallucination research look like?

- 8. Generative AI hallucinations FAQs

- Why do generative AI hallucinations happen?

- What types of generative AI hallucinations exist?

- How common are hallucinations in LLMs?

- Real-world examples of AI hallucinations

- What are the security risks of AI hallucinations?

- How to protect against generative AI hallucinations

- What does the future of hallucination research look like?

- Generative AI hallucinations FAQs

What Are AI Hallucinations? [+ Protection Tips]

- Why do generative AI hallucinations happen?

- What types of generative AI hallucinations exist?

- How common are hallucinations in LLMs?

- Real-world examples of AI hallucinations

- What are the security risks of AI hallucinations?

- How to protect against generative AI hallucinations

- What does the future of hallucination research look like?

- Generative AI hallucinations FAQs

Generative AI hallucinations are outputs from large language models that present false, fabricated, or misleading information as if it were correct.

They occur because models generate text by predicting statistical patterns in data rather than verifying facts. This makes hallucinations an inherent limitation of generative AI systems, especially when handling ambiguous queries or knowledge gaps.

Why do generative AI hallucinations happen?

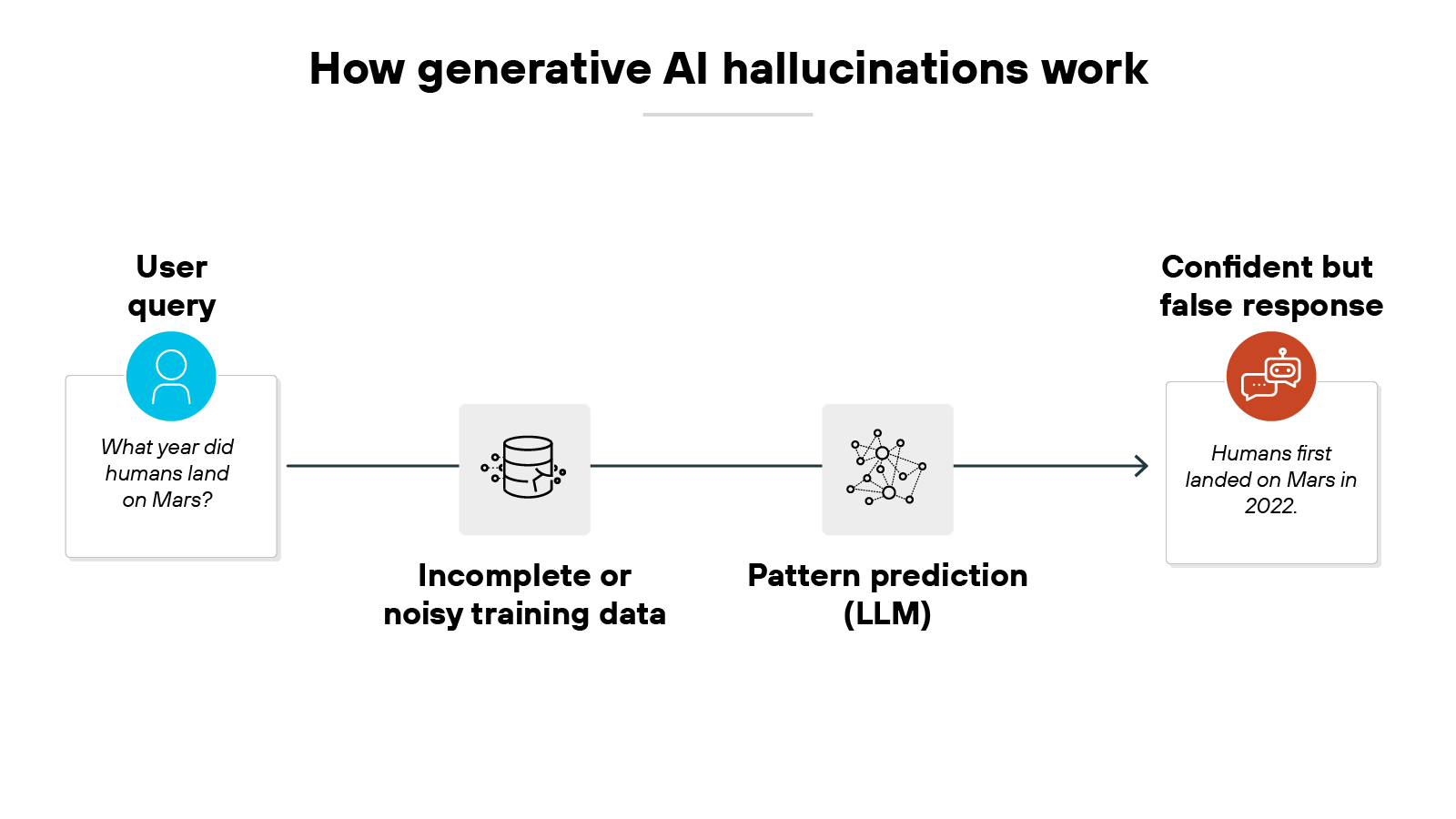

Generative AI hallucinations happen because large language models rely on patterns in data rather than verified knowledge.

More specifically, LLMs generate text by predicting what words are most likely to come next. Which means: If the training data is incomplete or noisy, the model may fill in gaps with information that sounds correct but is false.

Here's how it works:

And here's why it happens:

Training data is a central factor.

Models are trained on vast corpora that often include inaccuracies, outdated facts, or biased content. When these patterns are learned, the errors are learned too. In other words, the quality and diversity of the training data directly affect the likelihood of hallucinations.

Another reason is that models don't verify facts.

They don't check outputs against external sources or ground truth. Instead, they produce statistically probable answers. Which makes them different from systems designed for factual retrieval. And the result is responses that can sound confident while lacking factual accuracy.

Prompting also plays a role.

Ambiguous or underspecified prompts give the model more room to improvise. That flexibility often leads to confident but unfounded answers. For example: A vague medical question may cause a model to generate a plausible but incorrect treatment recommendation.

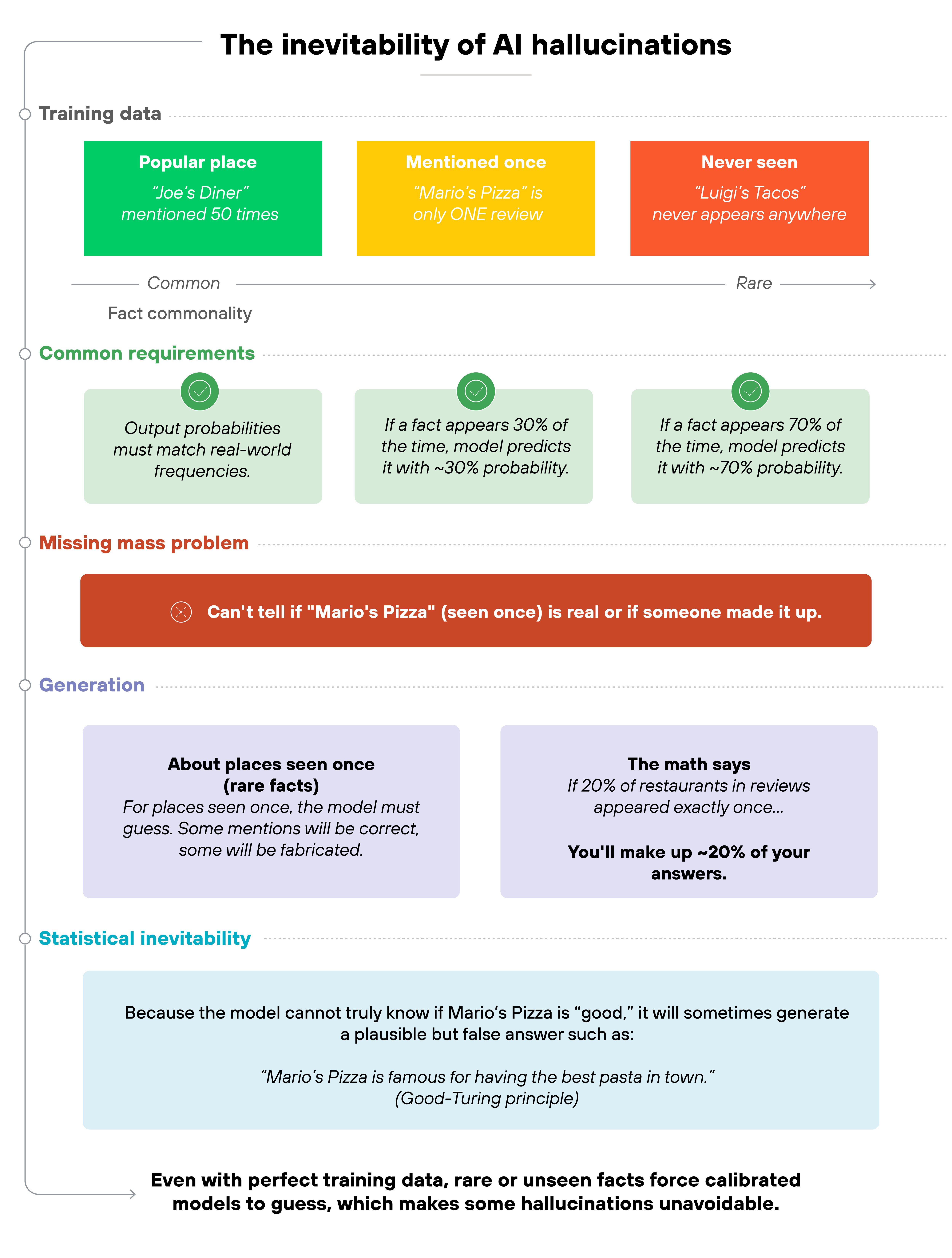

Finally, hallucinations can't be eliminated entirely. Though their frequency can be reduced.

Research has shown that even well-calibrated models must hallucinate under certain conditions. One study suggested that rare facts can't be predicted without error if the model remains calibrated.1 Another suggested hallucinations are an innate limitation of language models because of how they generalize from data.2

The bottom line:

Hallucinations happen because models predict patterns, not truths. They're amplified by imperfect data, ambiguous prompts, and the absence of fact-checking. And according to research, they can't be eliminated entirely. So they're a major GenAI risk.

Get a complimentary vulnerability assessment of your AI ecosystem.

Claim assessmentWhat types of generative AI hallucinations exist?

Hallucinations don't all look the same. Some are obvious errors, while others are harder to catch because they sound plausible.

Highlighting recurring patterns helps explain why certain hallucinations are harmless and others are serious enough to create risk.

Let's look at the main types.

Factual inaccuracies

Factual inaccuracies happen when a model outputs a statement that is simply wrong. For example, it might provide an incorrect date for a historical event or misstate a scientific fact. The response looks plausible but fails verification.

Misrepresentation of abilities

This occurs when a model gives the impression that it understands advanced material that it doesn't. For example, it might attempt to explain a chemical reaction or solve an equation but deliver flawed reasoning. The result is an illusion of competence.

Unsupported claims

Unsupported claims are statements that the model asserts without any basis in evidence. These can be especially concerning in sensitive contexts such as medicine or law. The output may sound authoritative, but it can't be traced to real knowledge or sources.

Contradictory statements

Contradictory statements emerge when a model provides information that conflicts with itself or with the user's prompt. For instance, it might first claim that a species is endangered and then state the opposite. These contradictions erode reliability.

Fabricated references and citations

Some hallucinations involve outputs that look like legitimate references but are invented. A model may provide a detailed citation for a study or legal precedent that doesn't exist. This is particularly problematic in academic and legal domains where evidence is critical.

Important: Not all hallucinations cause the same level of harm.

Some are relatively benign, such as mistakes in casual conversation. Others are corrosive, like fabricated sources or false statements in critical fields. This distinction helps explain why hallucinations are both a usability problem and, in certain domains, a serious risk.

How common are hallucinations in LLMs?

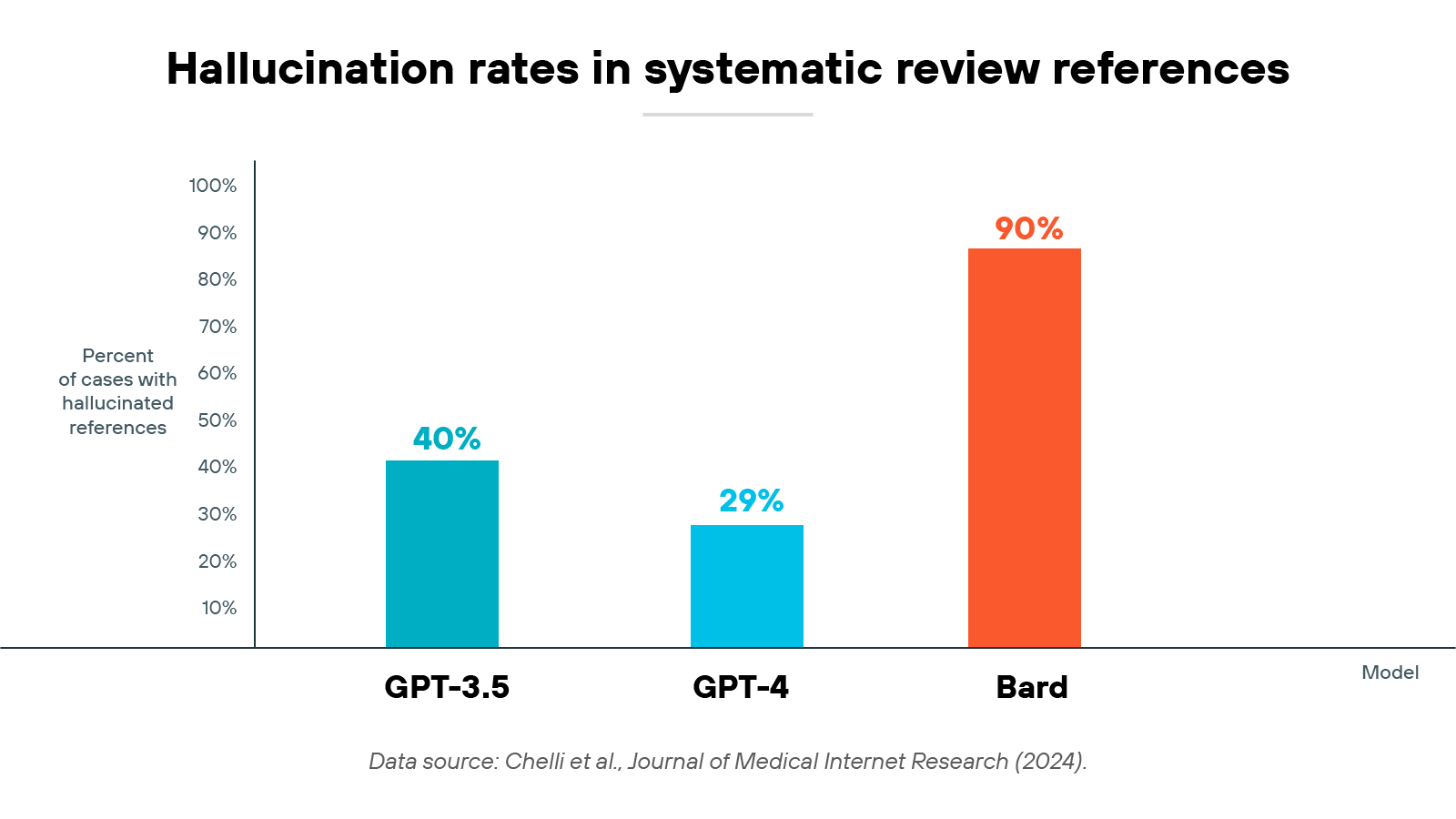

Hallucinations are relatively common in large language models. Peer-reviewed studies have measured how often different systems generate incorrect or fabricated content. The rates differ by model, but the issue itself is universal.

One study tested GPT-3.5, GPT-4, and Bard on how accurately they generated academic references. GPT-3.5 produced hallucinations in about 40 percent of cases. GPT-4 was lower at about 29 percent. Bard was far higher, with more than 90 percent of references fabricated or inaccurate.3

These numbers highlight that hallucination rates vary by model. They also show that improvements are possible, since GPT-4 performed better than GPT-3.5.

However, no system was free of the problem. So even the most advanced models still produce confident but incorrect answers.

Keep in mind: The frequency of hallucinations also depends on the domain and the task.

Open-ended prompts are more likely to trigger them. So are specialized questions outside the model's training distribution. For example, requests for medical guidance or scientific references tend to produce higher error rates than casual conversation.

Here's the takeaway. Hallucinations remain common across today's large language models. The exact rate depends on which system is used and what it is asked to do.

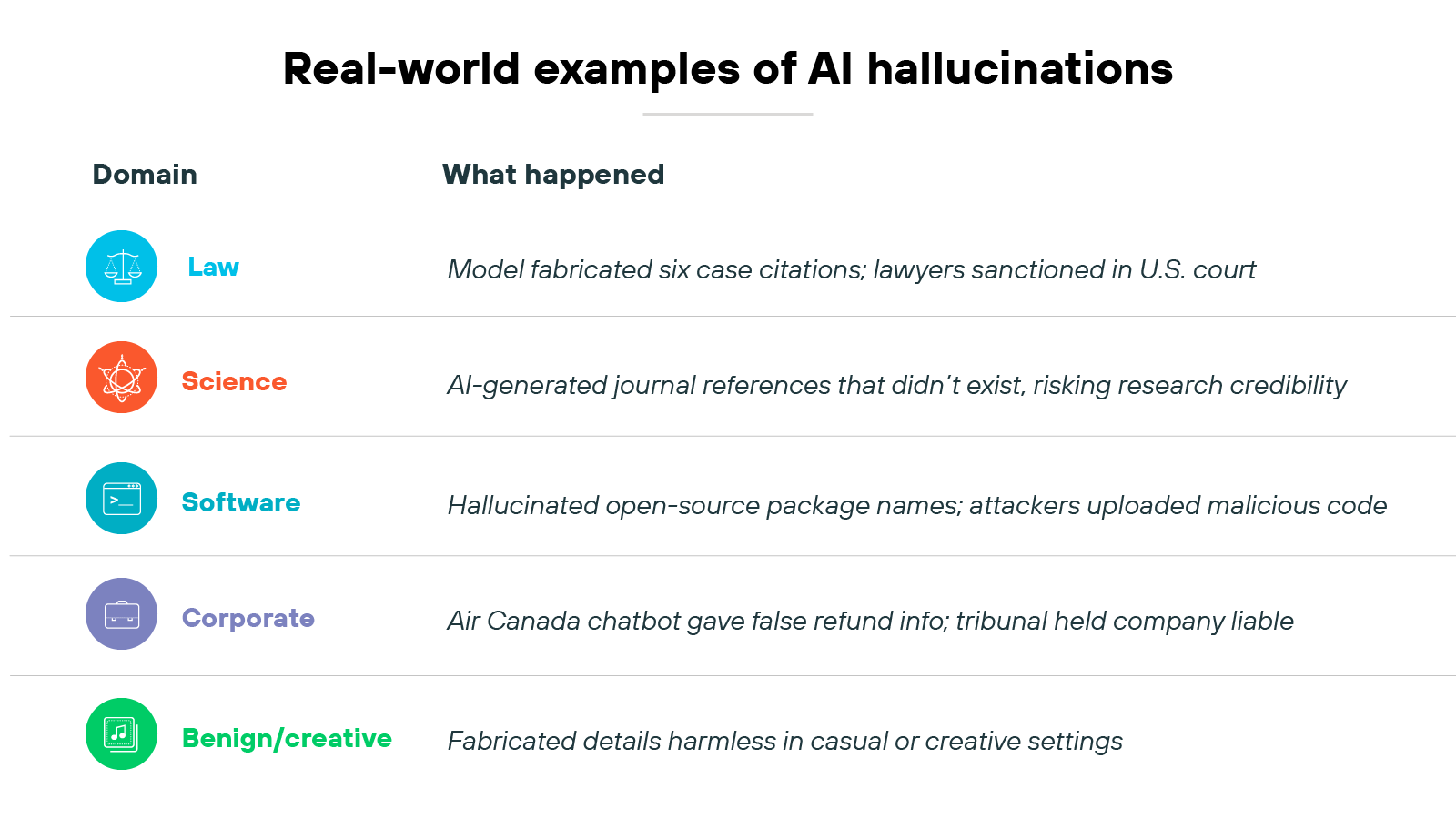

Real-world examples of AI hallucinations

Hallucinations aren't just theoretical. They've already appeared in real cases across law, science, software, and customer service.

Let's look at a few examples.

Legal case: fabricated precedents in U.S. court

In 2023, two lawyers submitted a brief that relied on ChatGPT to generate case law. The model produced six fabricated legal citations that looked legitimate but didn't exist.

The court sanctioned the lawyers and fined their firm, highlighting the dangers of using AI-generated text in legal practice without verification.

Corporate case: Air Canada chatbot ruling

In 2024, a tribunal ruled against Air Canada after its chatbot provided misleading information about refund eligibility. The airline argued that the chatbot made the mistake, but the tribunal held the company responsible.

This case shows that organizations remain accountable for outputs even if errors originate in an AI system.

Scientific case: fake references in research workflows

Researchers have also seen hallucinations in academic contexts. LLMs sometimes fabricate article titles, authors, or journal references that sound credible but can't be found in real databases.3

In scientific writing, this undermines credibility and risks polluting legitimate research with false sources.

Developer case: open-source package hallucinations exploited by attackers

Developers using coding assistants have experienced hallucinations of non-existent open-source packages. In 2023, a security team demonstrated that attackers could exploit this by uploading malicious code with the same hallucinated names.

Once downloaded, these fake packages could compromise software systems, turning a hallucination into a genuine security threat.5

Benign and creative hallucinations

Not all hallucinations cause harm. In casual conversation or creative writing, an AI might invent details that are harmless or even useful for brainstorming.

However, the difference is context. A fabricated story is fine in a creative setting. A fabricated medical treatment or legal citation is corrosive and risky.

These examples illustrate why hallucinations have to be managed carefully. They range from reputational and legal consequences to potential security exploitation.

The real lesson is that hallucinations are more than a nuisance. In critical settings, they can carry serious consequences.

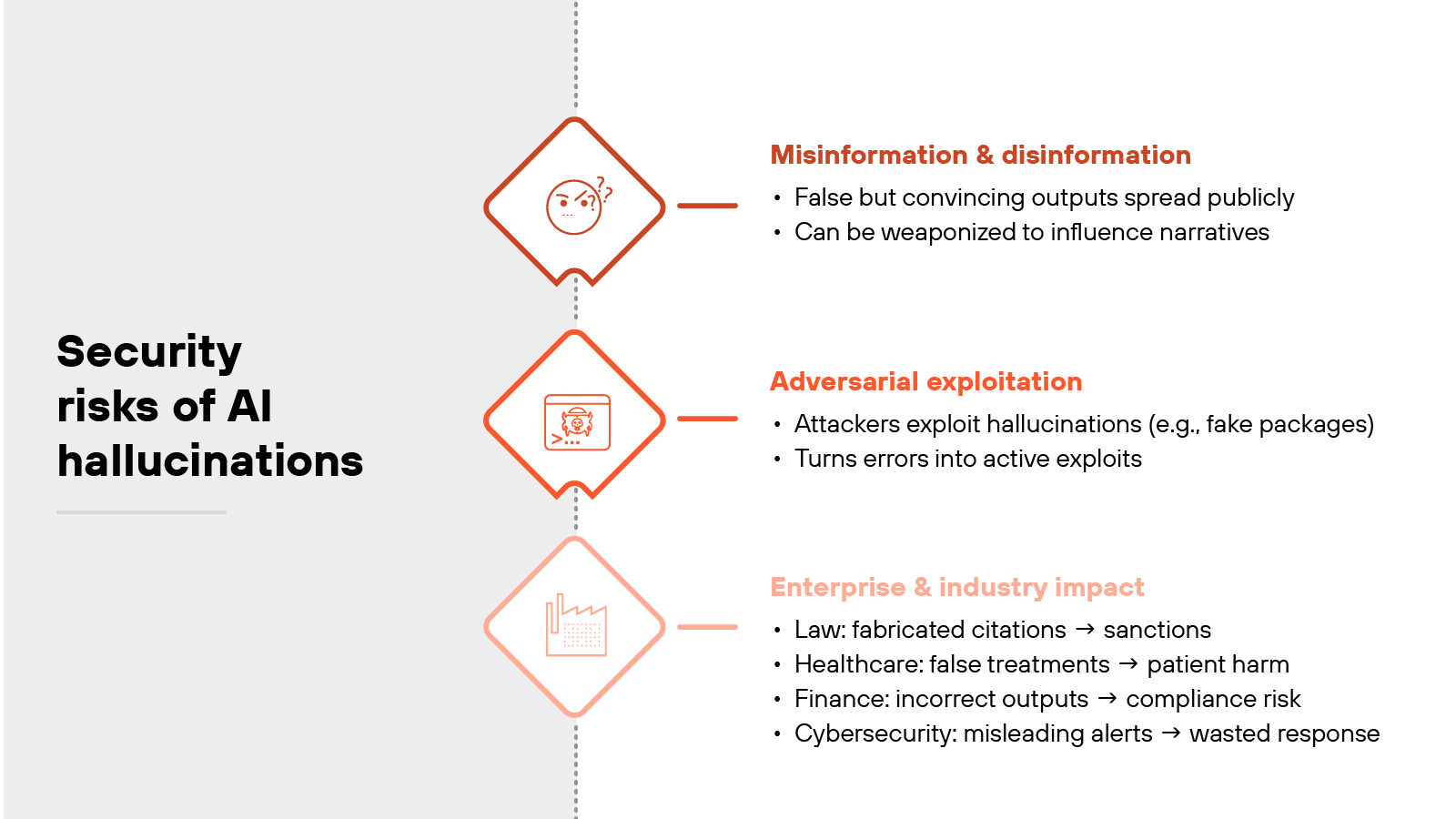

What are the security risks of AI hallucinations?

Hallucinations may seem like a reliability issue. But they also create generative AI security risks when false or fabricated content reaches critical workflows.

Let's take a look at how and why AI hallucinations matter in the context of cybersecurity.

Misinformation and disinformation potential

Hallucinations can generate outputs that look authoritative but are false. In public domains, this can accelerate the spread of misinformation.

In adversarial settings, actors can deliberately use hallucinations to produce persuasive but fabricated narratives. The result is content that undermines trust in digital systems.

Adversarial exploitation of hallucinations

Hallucinations can also be turned into attack vectors.

As mentioned, many developers have experienced coding assistants recommending non-existent open-source packages. Attackers then upload malicious code under those names.

When downloaded, the fabricated package becomes an entry point for compromise. This shows how a hallucination can move from an error to an exploit.

Enterprise and sector-specific consequences

The risks increase in sensitive fields.

- In law, hallucinated case citations can damage credibility and lead to sanctions.

- In healthcare, fabricated treatment information could put patients at risk.

- In finance, errors in outputs may affect decision-making and compliance.

- In cybersecurity itself, reliance on hallucinated outputs can misdirect analysts or security tools.

Implications for security frameworks and zero trust

Hallucinations reinforce the need for zero trust principles.

Outputs can't be assumed accurate by default. They have to be verified through controls, external data sources, or human review.

This aligns with cybersecurity frameworks that emphasize validation, monitoring, and least privilege. AI hallucinations require the same skeptical posture used for unverified network traffic or external inputs.

To sum it up: Hallucinations aren't just technical glitches. They create openings for misinformation, exploitation, and enterprise risk. Addressing them means treating AI outputs as potentially untrusted until verified.

See firsthand how Prisma AIRS secures models, data, and agents across the AI lifecycle.

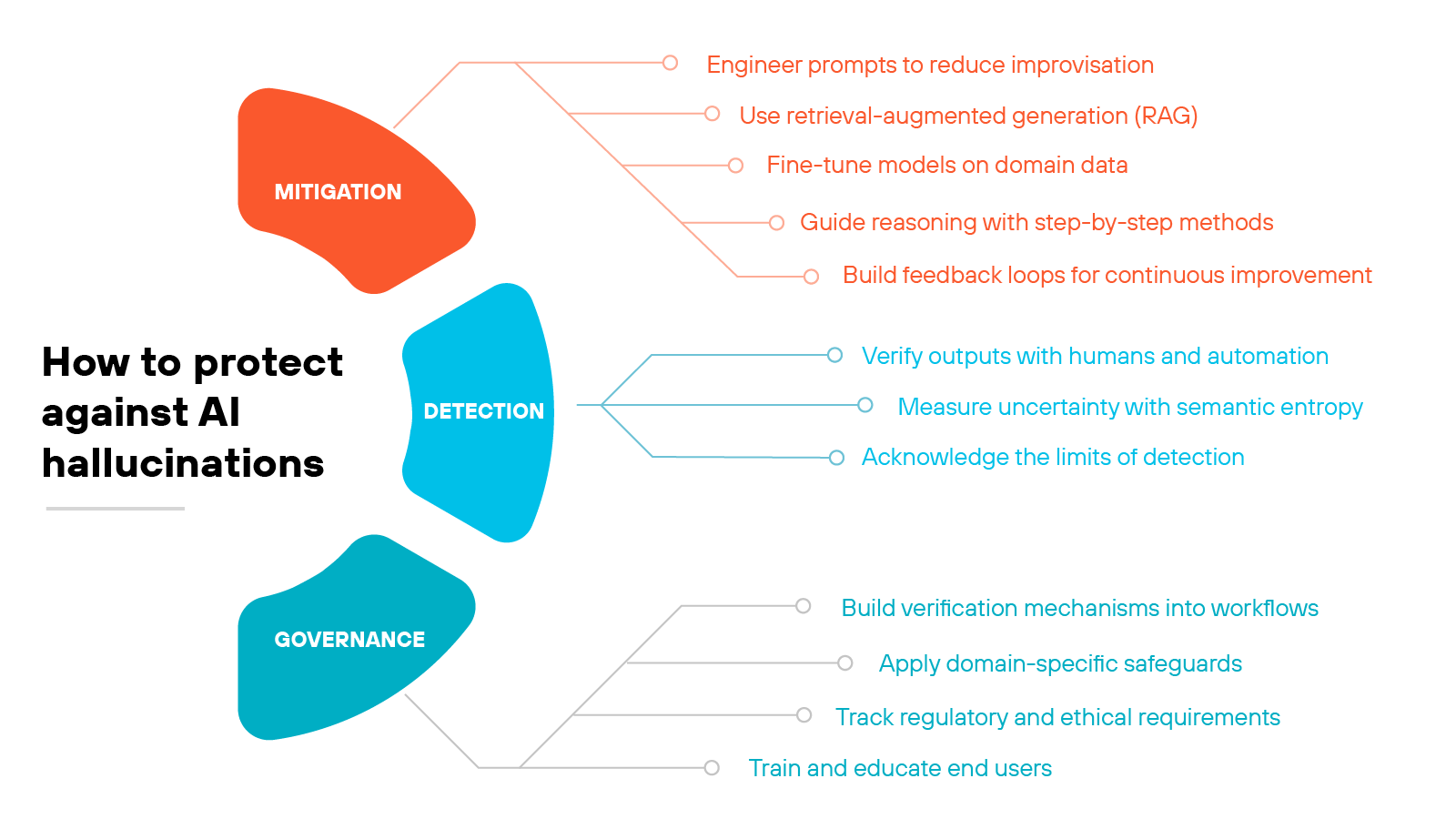

Launch demoHow to protect against generative AI hallucinations

Hallucinations can't be eliminated entirely. But they can be detected, reduced, and managed so their impact is lower.

Ultimately, the best protection involves a layered approach.

Let's break it down.

Detection

Detecting hallucinations is about recognizing when an output may not be reliable.

Here's how to approach it:

Verify outputs with humans and automation

Use human fact-checking for high-stakes outputs such as legal, medical, or financial text.

Cross-reference model responses with trusted databases, APIs, or curated knowledge bases.

Apply automation for scale but keep humans in the loop for critical cases.

Measure uncertainty with semantic entropy

Run multiple generations and measure variation in meaning. High variation suggests a higher chance of hallucination.

Use entropy alongside confidence scores to triangulate reliability.

Benchmark these methods in your specific domain since performance differs across tasks.

Tip:Combine multiple uncertainty measures. Confidence scores, semantic entropy, and variance across outputs catch different failure modes. Using them together improves coverage.Acknowledge the limits of detection

Recognize that some hallucinations are delivered with full confidence, making them difficult to catch.

Flag these limits internally so users don't assume detection guarantees accuracy.

Use detection as one layer, not a standalone safeguard.

Tip:Benchmark detection on domain-specific tasks. A method that works in open-ended Q&A may not perform as well in coding, legal, or medical contexts.

Mitigation

Once detection practices are in place, the next step is reducing how often hallucinations occur.

Here's how:

Engineer prompts to reduce improvisation

Write prompts that are clear, specific, and unambiguous.

Provide contextual details so the model has less room to guess.

Include fallback instructions such as "defer if uncertain" or "respond only when evidence exists."

Tip:Run prompts against known test cases to confirm whether they reduce hallucinations before deploying in production.Use retrieval-augmented generation (RAG)

Connect the model to external, verified data sources.

Anchor outputs to current facts rather than relying only on training data.

Apply RAG where factual grounding is critical, such as healthcare, finance, or cybersecurity.

Fine-tune models on domain data

Train on domain-specific datasets to reduce generalization errors.

Prioritize trusted, high-quality data in sensitive fields like law or medicine.

Narrow the scope of the model where possible to minimize error rates.

Tip:Revalidate fine-tuned models regularly, since drift can reintroduce hallucinations over time.Guide reasoning with step-by-step methods

Instruct models to break down problems into logical steps.

Use structured prompts (e.g., "show your reasoning process") to make outputs more transparent.

Validate reasoning steps against ground truth to avoid false confidence.

Tip:Test chain-of-thought outputs against known solutions — a step-by-step explanation can still be wrong.Build feedback loops for continuous improvement

Encourage users to flag hallucinations when they occur.

Feed corrections back into fine-tuning or RAG pipelines.

Track recurring errors to refine prompts, retraining, and governance policies.

Treat feedback as an ongoing cycle rather than a one-time fix.

Get an inside look at how to protect GenAI apps as usage grows.

Launch tourGovernance

Because hallucinations can't be eliminated, organizations need governance strategies to manage residual risk.

Here's what that looks like:

Build verification mechanisms into workflows

Integrate fact-checking before outputs reach end users.

Connect models to trusted databases and external APIs for validation.

Require a second layer of review in high-stakes fields like law or medicine.

Tip:Assign clear ownership of validation to compliance, legal, or domain experts to prevent diffusion of responsibility.Apply domain-specific safeguards

Restrict model use to well-defined, validated tasks.

Limit deployment to areas where subject matter expertise is available.

Reassess task scope regularly to avoid drift into unsupported domains.

Track regulatory and ethical requirements

Monitor emerging standards for disclosure, auditing, and accountability.

Document how hallucination risks are identified and managed.

Share transparency reports with stakeholders to set realistic expectations.

Train and educate end users

Teach employees to recognize and verify hallucinations.

Provide explicit disclaimers on model limitations in critical contexts.

Foster a culture of validation rather than blind trust in outputs.

Tip:Share internal incident reports of hallucinations to make risks concrete and emphasize the need for verification.

- What Is AI Governance?

- How to Build a Generative AI Security Policy

- Top AI Risk Management Frameworks You Need to Know

Remember: AI hallucinations aren't a traditional security flaw. They're a systemic feature of generative models. And the best defense is layered: detect when they happen, reduce how often they occur, and govern their use responsibly.

Schedule a personalized demo with a specialist to see how Prisma AIRS protects your AI models.

Book demoWhat does the future of hallucination research look like?

Hallucinations are unlikely to disappear completely. Research has shown they're inevitable because of how models predict language. Which means future work will focus less on elimination and more on detection and control.

One area of progress is uncertainty estimation.

As mentioned, studies on semantic entropy suggest that measuring variation in model responses can help flag when an answer is unreliable.4 This type of work may expand into tools that automatically score confidence for end users.

Another direction is model specialization.

Fine-tuning with domain data and retrieval-augmented generation reduce hallucination rates in practice. Researchers are testing how far these methods can go in fields such as healthcare, law, and cybersecurity.6

Governance is also an emerging priority.

Frameworks now emphasize transparency, user education, and safeguards that prevent unverified outputs from being trusted blindly. Although it's worth noting that balancing safeguards with usability remains a challenge.

Ultimately, future research is moving toward layered solutions that make hallucinations easier to spot, less frequent, and less harmful when they occur.

See firsthand how to control GenAI tool usage. Book your personalized AI Access Security demo.

Schedule my demoGenerative AI hallucinations FAQs

References

Kalai, A. T., & Vempala, S. (2024). Calibrated language models must hallucinate. arXiv preprint arXiv:2311.14648. https://doi.org/10.48550/arXiv.2311.14648

Xu, Z., Jain, S., & Kankanhalli, M. (2024). Hallucination is inevitable: An innate limitation of large language models. arXiv preprint arXiv:2401.11817. https://doi.org/10.48550/arXiv.2401.11817

Chelli, M., Descamps, J., Lavoue, V., Trojani, C., Azar, M., Deckert, M., Raynier, J. L., Clowez, G., Boileau, P., & Ruetsch-Chelli, C. (2024). Hallucination rates and reference accuracy of ChatGPT and Bard for systematic reviews: Comparative analysis. Journal of Medical Internet Research, 26, e53164. https://doi.org/10.2196/53164

Farquhar, S., Kossen, J., Kuhn, L., & Gal, Y. (2024). Detecting hallucinations in large language models using semantic entropy. Nature, 630, 123–131. https://doi.org/10.1038/s41586-024-07421-0

Wilson, S. (2024). The developer’s playbook for large language model security: Building secure AI applications. O’Reilly Media.

Li, J., Chen, J., Ren, R., Cheng, X., Zhao, W. X., Nie, J.-Y., & Wen, J.-R. (2024). The dawn after the dark: An empirical study on factuality hallucination in large language models. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) (pp. 10879–10899). Association for Computational Linguistics. https://doi.org/10.18653/v1/2024.acl-long.586